Creating a Private Service Connect Apache Kafka Cluster

This guide describes how to provision a Private Service Connect (PSC) Kafka cluster on the Instaclustr Platform. Private Service Connect is a Google Cloud Platform (GCP) feature that enables private connectivity between Google Cloud services and applications in your Virtual Private Cloud (VPC). By using PSC, you can establish a private data flow between Google Cloud services and your own VPC without exposing your data to the public internet, thereby increasing security. More information about PSC is available in the GCP documentation.

Overview

A Kafka cluster with the Private Service Connect feature enabled exposes its Kafka nodes through Private Service Connect Service Attachments, the resources used to create Private Service Connect published services. Kafka client applications connect to the cluster from a VPC network using Private Service Connect Endpoints, which are created by deploying forwarding rules that reference the Service Attachments. Traffic remains entirely within Google Cloud when connecting to a Private Service Connect Kafka cluster.

At a high level, client applications connect to the Kafka cluster as follows:

Each Kafka PSC cluster publishes three Private Service Connect Service Attachments that can be connected to from the client VPC. Each Service Attachment is in its own private subnet inside the Kafka PSC cluster network.

Limitations

- Enterprise add-ons are not yet supported on Kafka GCP Private Service Connect clusters, but support will be added soon.

- If you wish to perform operations via the Kafka Admin API (e.g. reassigning topic partitions, creating users), please either contact Instaclustr or, if the operations relate to topics, users or ACLs, use the Instaclustr API endpoints. We're working to add support for these incrementally over time.

- Private Service Connect Kafka clusters do not support mTLS at the moment; support for it will be added later.

Provisioning a cluster via Instaclustr Console

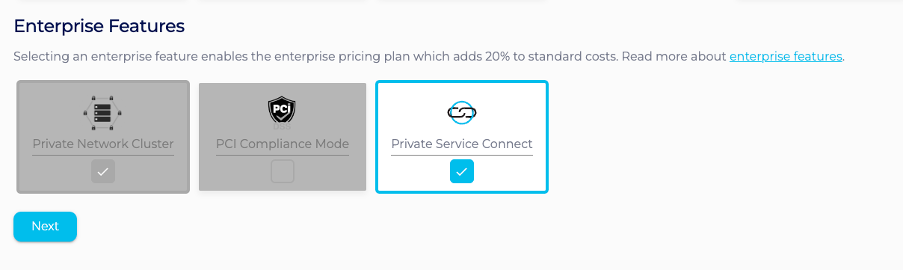

1 – Log in to the Instaclustr Console, provision a new Kafka cluster, and select the Private Service Connect feature. This option is only available when the selected provider is GCP.

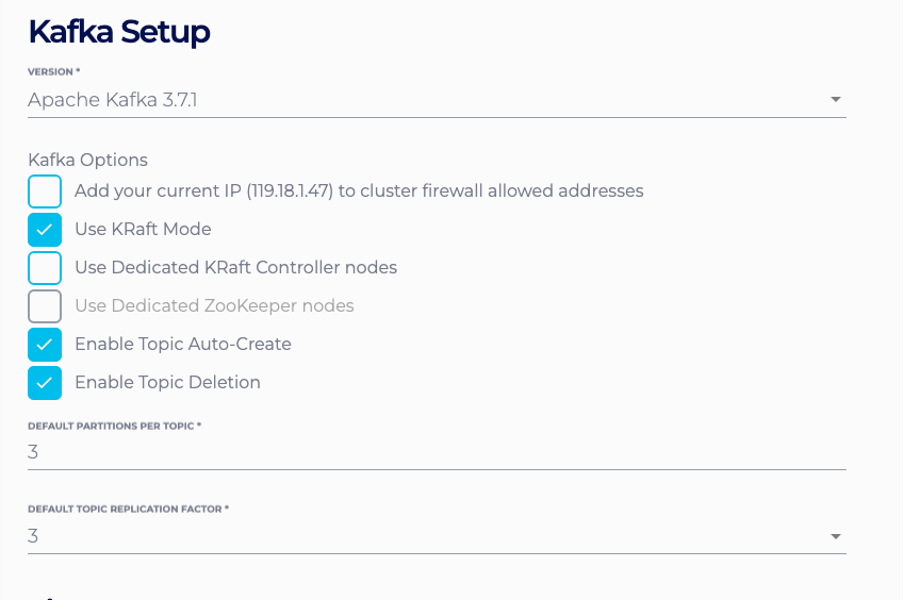

2 – On the next page, specify the Kafka version, among other Kafka options, as you would when creating a standard Kafka cluster.

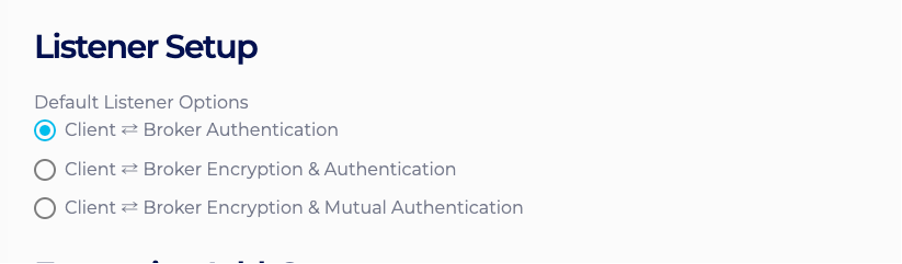

3 – On the same page, enable client encryption and authentication if required. mTLS support and Enterprise add-ons for PSC clusters are currently being implemented and will be available soon.

4 – On the next page, enter the Private Service Connect Service Attachment network CIDR. This CIDR determines the CIDRs of the subnets that hold the Private Service Connect Service Attachments. If no CIDR is provided, it defaults to the next available CIDR range after the Data Centre network.
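Before entering a custom CIDR, it can be worth checking it against the Data Centre network. The sketch below assumes the Service Attachment range should not overlap the Data Centre network; the default described above implies this, but the guide does not state it as a rule. The function name and the example ranges are hypothetical.

```python
import ipaddress


def overlaps_data_centre(attachment_cidr: str, data_centre_cidr: str) -> bool:
    """Return True if the two CIDR ranges share any addresses.

    Assumption: a Service Attachment CIDR that overlaps the Data Centre
    network is undesirable. Both arguments are illustrative inputs.
    """
    attachment = ipaddress.ip_network(attachment_cidr, strict=True)
    data_centre = ipaddress.ip_network(data_centre_cidr, strict=True)
    return attachment.overlaps(data_centre)


# Hypothetical example ranges, not values taken from the Console.
print(overlaps_data_centre("10.1.0.0/16", "10.0.0.0/16"))
```

With `strict=True`, a CIDR that has host bits set (for example `10.1.0.5/16`) raises a `ValueError`, which catches a common typing mistake early.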

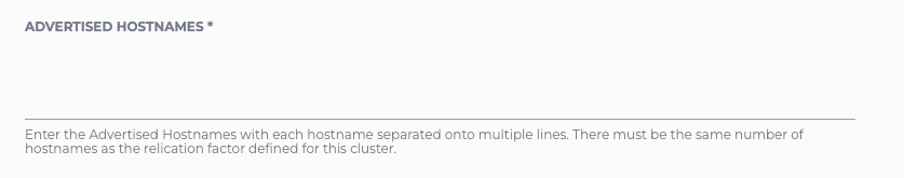

5 – On the same page, enter the Advertised Hostnames. Advertised Hostnames are used by client applications to connect to the cluster. Each Advertised Hostname corresponds to one Private Service Connect endpoint, which in turn connects to one Service Attachment exposed by the cluster. For instance, if the Advertised Hostnames provided are

us-central1a.kafka.test.com, us-central1b.kafka.test.com, us-central1c.kafka.test.com

then client applications connect to the cluster using these hostnames on port 9091.
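As a minimal sketch of how a client might turn the Advertised Hostnames into a Kafka `bootstrap.servers` value, assuming port 9091 as stated in this step. The helper name `bootstrap_servers` is hypothetical and is not part of any Instaclustr or Kafka library.

```python
KAFKA_PSC_PORT = 9091  # port given in this guide for PSC clusters


def bootstrap_servers(advertised_hostnames, port=KAFKA_PSC_PORT):
    """Join advertised hostnames into a comma-separated host:port list."""
    hosts = [h.strip() for h in advertised_hostnames if h.strip()]
    if not hosts:
        raise ValueError("at least one advertised hostname is required")
    return ",".join(f"{host}:{port}" for host in hosts)


# Hostnames from the example above.
hostnames = [
    "us-central1a.kafka.test.com",
    "us-central1b.kafka.test.com",
    "us-central1c.kafka.test.com",
]
print(bootstrap_servers(hostnames))
```

The resulting string can be passed to whichever Kafka client library you use as its bootstrap server setting. The hostnames must resolve, inside the client VPC, to the IP addresses of the corresponding Private Service Connect endpoints.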

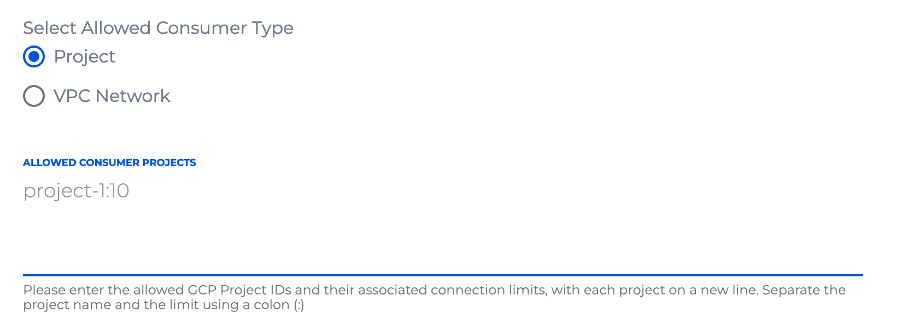

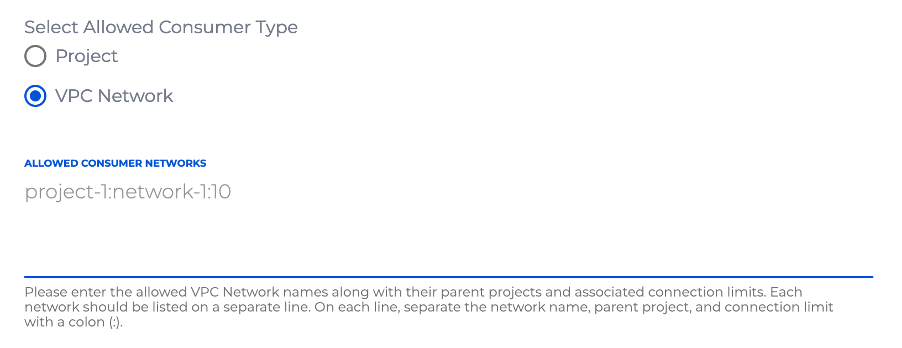

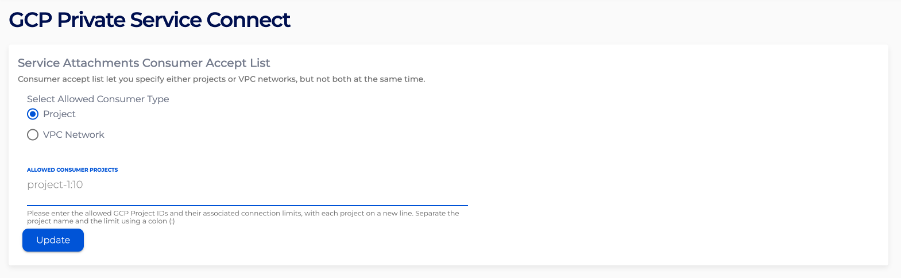

6 – On the same page, select the Allowed Consumer Type and enter the Allowed Consumer Projects/Networks, also known as the Consumer Accept List in GCP. The service attachment only accepts inbound connection requests if the consumer project or VPC network is on its consumer accept list. Otherwise, connections remain in the pending state and can be accepted manually via the GCP console.

A consumer accept list lets you specify projects or VPC networks, but not both at the same time. Both project-based and network-based accept lists use the project ID as the main reference. A network-based accept list additionally takes the name of a network in that project. At the end of the string, specify the connection limit, which is the number of consumer endpoints or backends that can connect to this service. Separate all parts with a colon (:). For example, to allow 100 connections from project ID instaclustr, enter instaclustr:100 in the text box. To narrow the scope to a network, change the item to instaclustr:<network-name>:100.

The list can also be updated later via the GCP Private Service Connect update page in the Console. Connection reconciliation is enabled by default, so adding or removing items from the list affects existing connections. For instance, when you remove a project from the accept list, all connections from that project transition to pending.
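The entry format described above can be sketched as a small parser. This is an illustration only: the names `AcceptEntry`, `parse_accept_entry` and `check_single_type` are hypothetical, and the sketch assumes that project IDs and network names contain no colons.

```python
from typing import NamedTuple, Optional


class AcceptEntry(NamedTuple):
    project_id: str
    network_name: Optional[str]  # None for a project-based entry
    connection_limit: int


def parse_accept_entry(text: str) -> AcceptEntry:
    """Parse 'project:limit' or 'project:network:limit'."""
    parts = [p.strip() for p in text.split(":")]
    if len(parts) == 2:
        project_id, limit = parts
        network_name = None
    elif len(parts) == 3:
        project_id, network_name, limit = parts
    else:
        raise ValueError(f"expected 2 or 3 colon-separated parts: {text!r}")
    if not project_id or network_name == "" or not limit.isdecimal():
        raise ValueError(f"malformed accept list entry: {text!r}")
    return AcceptEntry(project_id, network_name, int(limit))


def check_single_type(entries) -> None:
    """Reject lists that mix project-based and network-based entries."""
    kinds = {entry.network_name is not None for entry in entries}
    if len(kinds) > 1:
        raise ValueError("accept list mixes projects and networks")


print(parse_accept_entry("instaclustr:100"))
```

Calling `check_single_type` on the parsed entries mirrors the rule that a list holds either projects or networks, never both.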

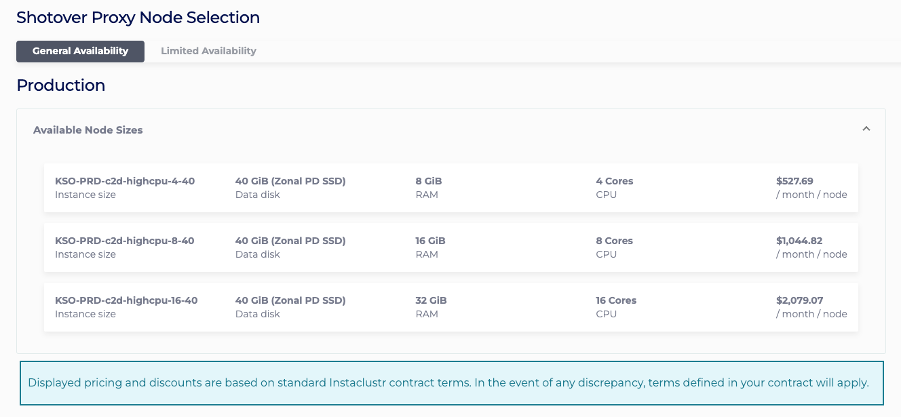

7 – On the same page, select the Shotover and Kafka node sizes respectively. Shotover is a reverse proxy application that routes traffic from Kafka clients to Kafka nodes. Each Shotover instance fronts the Kafka nodes that are in the same rack. As a general guideline, choose Shotover node sizes based on the throughput you expect the cluster to handle:

- For throughput < 1 GB/s, choose KSO-PRD-c2d-highcpu-4-40

- For throughput between 1 GB/s and 2 GB/s, choose KSO-PRD-c2d-highcpu-8-40

- For throughput > 2 GB/s, choose KSO-PRD-c2d-highcpu-16-40

Note that the above is only a general guideline. The node sizes actually required vary with several factors, such as the number of Kafka nodes, average message size and number of partitions. You can always resize the Shotover instances via the Console or API based on your actual workload.
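The guideline can be expressed as a simple lookup. This sketch only encodes the three thresholds listed in this step; the function name is hypothetical, and the result is a starting point rather than a sizing guarantee.

```python
def suggest_shotover_node_size(throughput_gb_per_s: float) -> str:
    """Map expected throughput (GB/s) to the guideline Shotover node size."""
    if throughput_gb_per_s < 0:
        raise ValueError("throughput must not be negative")
    if throughput_gb_per_s < 1:
        return "KSO-PRD-c2d-highcpu-4-40"
    if throughput_gb_per_s <= 2:
        return "KSO-PRD-c2d-highcpu-8-40"
    return "KSO-PRD-c2d-highcpu-16-40"


print(suggest_shotover_node_size(1.5))
```

Throughput of exactly 1 GB/s or 2 GB/s falls into the middle tier here, following the "between 1 GB/s and 2 GB/s" wording.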

8 – On the Confirmation page, check that settings such as the Advertised Hostnames are correct, accept the Instaclustr terms and conditions, and click Create Cluster.

Please contact Instaclustr Support if you have any questions.

Provisioning a cluster via Terraform

Below is an example of provisioning a Private Service Connect cluster via Terraform:

```hcl
terraform {
  required_providers {
    instaclustr = {
      source  = "instaclustr/instaclustr"
      version = ">= 2.0.0, < 3.0.0"
    }
  }
}

provider "instaclustr" {
  terraform_key = "Instaclustr-Terraform <username>:<provisioning API key>"
}

resource "instaclustr_kafka_cluster_v3" "kafka-gcp-psc" {
  name                         = "<cluster_name>"
  private_network_cluster      = true
  sla_tier                     = "<NON_PRODUCTION/PRODUCTION>"
  allow_delete_topics          = true
  auto_create_topics           = true
  bundled_use_only             = false
  client_broker_auth_with_mtls = false
  client_to_cluster_encryption = true

  data_centre {
    cloud_provider        = "GCP"
    name                  = "<DC name>"
    network               = "<network>"
    provider_account_name = "<provider account name>"
    region                = "<region>"
    node_size             = "<nod>"
    number_of_nodes       = 3

    private_connectivity {
      gcp_private_service_connect {
        gcp_private_service_connect_rack {
          advertised_hostname = "<rack 1 hostname>"
        }
        gcp_private_service_connect_rack {
          advertised_hostname = "<rack 2 hostname>"
        }
        gcp_private_service_connect_rack {
          advertised_hostname = "<rack 3 hostname>"
        }
        shotover_proxy {
          node_size = "<shotover node size>"
        }
      }
    }
  }

  default_number_of_partitions = 3
  default_replication_factor   = 3
  kafka_version                = "<kafka version>"

  kraft {
    controller_node_count = 3
  }

  pci_compliance_mode = false
}
```

See https://registry.terraform.io/providers/instaclustr/instaclustr/latest/docs/resources/kafka_cluster_v3 for more information.

Example of optional Private Service Connect consumer accept list creation via Terraform:

```hcl
resource "instaclustr_gcp_service_attachments_accept_list_v2" "gcp-psc-service-attachments-accept-list" {
  cdc_id = "<cluster_data_centre_id>"
  consumer_accept_list {
    accept_project_id   = "<project_id>"
    accept_network_name = "<network_name>"
    connection_limit    = 10
  }
}
```

See https://registry.terraform.io/providers/instaclustr/instaclustr/latest/docs/resources/gcp_service_attachments_accept_list_v2 for more information.