Overview

Serverless functions on Kubernetes combine the benefits of serverless architecture, such as on-demand execution and autoscaling, with the container orchestration capabilities of Kubernetes.

In a serverless model, applications or functions run only when triggered, scaling up and down automatically to meet demand without manual intervention. Kubernetes provides the infrastructure layer that manages and scales containerized workloads, making it a strong platform for serverless operations.

By leveraging Kubernetes, serverless workloads can run in a consistent and controlled environment, using containers to isolate functions and dependencies. This approach is useful in hybrid and multi-cloud environments, where Kubernetes clusters serve as a unified platform for deploying and scaling serverless functions.

This is part of a series of articles about data architecture.

Benefits of serverless on Kubernetes

Deploying serverless on Kubernetes brings multiple advantages, including:

- Scalability and resource efficiency: Serverless functions on Kubernetes automatically scale based on demand, which reduces idle resources and improves cost-efficiency. Kubernetes’ Horizontal Pod Autoscaler (HPA) ensures that workloads expand or contract as needed, optimizing resource usage.

- Unified management across environments: Kubernetes allows organizations to run serverless functions across on-premises, cloud, and hybrid environments. This unification simplifies operations and provides a consistent infrastructure for deploying and managing applications.

- Flexibility in language and runtime: Unlike traditional serverless environments, which may restrict languages or runtimes, Kubernetes-based serverless solutions support functions written in any language, packaged as container images. This flexibility benefits developers working with diverse languages and frameworks.

- Enhanced observability and debugging: Kubernetes integrates well with monitoring and logging tools like Prometheus and Grafana, providing insights into function performance, latency, and resource usage. This observability enables easier troubleshooting and performance tuning.

- Integration with Kubernetes ecosystem: By running serverless workloads on Kubernetes, organizations can leverage Kubernetes-native tools and services, such as service meshes, networking, and security controls. This integration offers greater control over application behavior and security in complex environments.

- Reduced vendor lock-in: Kubernetes serverless frameworks are often open source and can run on any Kubernetes distribution. This reduces dependency on a single cloud provider, allowing organizations to avoid vendor lock-in and gain more flexibility in choosing where and how to run their workloads.

How serverless architecture works with Kubernetes

Serverless architecture on Kubernetes operates by running functions or applications in response to events, without requiring developers to manage the underlying infrastructure directly. Kubernetes serves as the orchestration layer, managing these functions as containerized workloads, and handling aspects like scheduling, scaling, and failover:

- Event-driven execution: In serverless Kubernetes environments, functions are triggered by events, which can be HTTP requests, queue messages, or database updates. Event sources connect to the serverless platform through APIs or messaging protocols, delivering the events that initiate function execution. Serverless frameworks like Knative Eventing or OpenFaaS allow developers to define event triggers for their functions.

- Autoscaling and resource management: Serverless frameworks on Kubernetes use Kubernetes’ Horizontal Pod Autoscaler (HPA) and other scaling mechanisms to scale functions based on demand. When a function is triggered, Kubernetes rapidly provisions containers to handle incoming requests, scaling up when demand increases and down when it subsides. This elasticity optimizes resource usage and minimizes costs by ensuring that compute resources are used only when necessary.

- Isolation and lifecycle management: Each serverless function runs within its own container, which isolates functions from one another and keeps their dependencies and runtime environments from interfering. Kubernetes manages the lifecycle of these containers, starting, restarting, or terminating them as required. This isolation and lifecycle management are essential for maintaining security and reliability in multi-tenant or complex applications.

- Observability and control: Kubernetes integrates with logging and monitoring tools like Prometheus and Grafana, providing visibility into function performance, resource consumption, and execution latency. These insights help administrators optimize serverless operations by identifying bottlenecks, troubleshooting issues, and tuning function configurations.
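To make the event-driven model concrete, here is a minimal sketch of an HTTP-triggered function as it might be packaged in a container. It assumes the platform routes requests to the port named by a `PORT` environment variable (Knative Serving follows this convention); the `handle_event` name, the `FunctionHandler` class, and the JSON payload shape are illustrative and not part of any framework's API.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def handle_event(body: bytes) -> bytes:
    """Business logic: runs once per event and keeps no local state."""
    payload = json.loads(body or b"{}")
    name = payload.get("name", "world")
    return json.dumps({"message": f"hello, {name}"}).encode()


class FunctionHandler(BaseHTTPRequestHandler):
    """Thin HTTP adapter that turns a POST request into a function call."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        result = handle_event(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(result)))
        self.end_headers()
        self.wfile.write(result)


def serve() -> None:
    """Container entrypoint: listen on the port the platform assigns."""
    port = int(os.environ.get("PORT", "8080"))  # 8080 is an assumed local default
    ThreadingHTTPServer(("", port), FunctionHandler).serve_forever()
```

Keeping the logic in `handle_event` separate from the HTTP adapter means the same function can be unit tested without a server and reused behind a different trigger, such as a queue consumer.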


Tips from the expert

Andrew Mills is an industry leader with extensive experience in open source data solutions and a proven track record in integrating and managing Apache Kafka and other event-driven architectures.

In my experience, here are tips that can help you better implement and optimize serverless Kubernetes environments:

- Leverage custom resource definitions (CRDs) for advanced configurations: Extend Kubernetes’ native capabilities by creating custom resource definitions for the serverless framework. This approach allows you to integrate serverless-specific requirements, like custom triggers or advanced scaling policies, without cluttering the core Kubernetes resources.

- Design for stateful workloads with minimal state coupling: While serverless is inherently stateless, many applications require state management. Use external state storage systems, such as Valkey™/Redis™, DynamoDB, or etcd, and ensure the serverless functions interact with these efficiently to avoid coupling that limits scalability.

- Employ function chaining with orchestration tools: Use orchestration tools like Argo Workflows or Knative Eventing to design workflows where functions trigger each other in sequence. This simplifies the development of complex workflows and ensures consistent management and monitoring of interconnected serverless operations.

- Optimize function packaging for Kubernetes: Build lean container images by stripping unnecessary dependencies and using minimal base images like distroless. Smaller images reduce deployment times and cold start latency, especially critical in high-frequency serverless use cases.

- Utilize service meshes for granular control: Incorporate service meshes like Istio or Linkerd to manage networking, security, and traffic for serverless functions. Service meshes provide fine-grained observability, rate-limiting, and advanced routing capabilities, improving the control and reliability of serverless workloads.
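The tip on minimal state coupling can be sketched as follows. The function itself holds no state; everything lives behind a small store interface that is injected at call time. `StateStore`, `InMemoryStore`, and `count_visit` are hypothetical names for this example, and the in-memory class only stands in for an external system such as Valkey/Redis or DynamoDB.

```python
from typing import Dict, Optional, Protocol


class StateStore(Protocol):
    """Hypothetical interface; a real external store client would sit behind it."""

    def get(self, key: str) -> Optional[int]: ...

    def set(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Stand-in for an external store, suitable for local testing only."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> Optional[int]:
        return self._data.get(key)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(store: StateStore, user_id: str) -> int:
    """Stateless handler: all state lives in the injected store.

    Note: this read-modify-write is not atomic. With concurrent function
    instances, a real store's atomic increment would be the safer choice.
    """
    visits = (store.get(user_id) or 0) + 1
    store.set(user_id, visits)
    return visits


store = InMemoryStore()
first = count_visit(store, "alice")
second = count_visit(store, "alice")
```

Because any instance can serve any request, the platform is free to scale the function out or down to zero without losing data.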

Key serverless frameworks for Kubernetes

Several frameworks have been developed to provide serverless capabilities on Kubernetes. These tools simplify deployment and management of serverless functions on Kubernetes clusters.

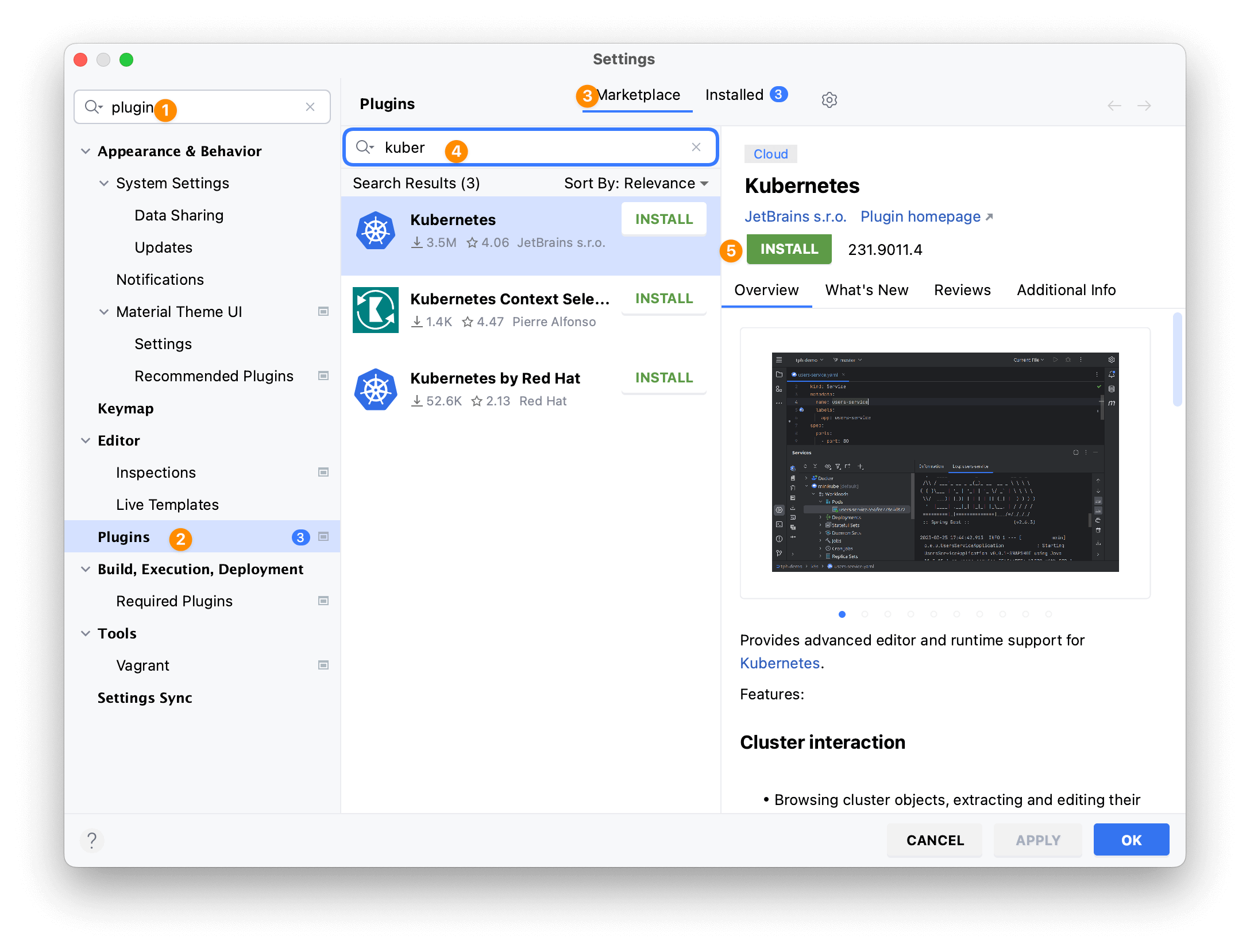

1. Knative

Knative is a Kubernetes-based platform that provides building blocks for creating, deploying, and managing serverless workloads. It emphasizes developer experience, with features for deploying source code directly and managing the application lifecycle. Its architecture supports autoscaling, event-driven consumers, and simplified deployments.

License: Apache-2.0

Repo: https://github.com/knative/serving

GitHub stars: 5k+

Contributors: 250+

One of Knative’s main components is Knative Serving, which manages the running and scaling of serverless containers. It incorporates service management, routes, configurations, and revision management for applications. Knative Eventing handles the delivery of events to workloads, allowing for event-driven application architectures.
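As a rough illustration of what Knative Serving manages, the sketch below builds a Knative `Service` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name, image reference, and scale bounds are placeholders chosen for this example; the helper function `knative_service` is likewise hypothetical.

```python
import json


def knative_service(name: str, image: str, min_scale: int = 0, max_scale: int = 10) -> dict:
    """Build a minimal Knative Service manifest with autoscaling bounds."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "metadata": {
                    "annotations": {
                        # A minimum of 0 lets the service scale to zero when idle.
                        "autoscaling.knative.dev/min-scale": str(min_scale),
                        "autoscaling.knative.dev/max-scale": str(max_scale),
                    }
                },
                "spec": {"containers": [{"image": image}]},
            }
        },
    }


# Placeholder name and image, not a real registry path.
manifest = knative_service("hello", "registry.example.com/hello:latest")
print(json.dumps(manifest, indent=2))
```

Each change to the template section produces a new revision, which is what allows Knative to route traffic between versions of the same service.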


2. OpenFaaS

OpenFaaS is a serverless framework that simplifies the deployment of functions on Kubernetes. It emphasizes developer simplicity, enabling functions to be written in any programming language and packaged as containers. Its tooling automates building and deploying functions with minimal code changes.

License: MIT; Community Edition (CE) EULA

Repo: https://github.com/openfaas/faas

GitHub stars: 25k+

Contributors: 150+

OpenFaaS uses a simple function definition format and leverages Kubernetes’ auto-scaling capabilities to manage resource allocation efficiently. It supports event triggers and a range of integrations, increasing its versatility in serverless applications. The framework also offers UI and monitoring tools, which help manage and observe functions in a Kubernetes environment.
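To give a sense of how small a function definition can be, here is a sketch in the style of the classic OpenFaaS Python template, where a `handle` function receives the request body as a string and returns the response. The word-count logic is invented for this example, and the exact handler signature depends on the template you choose, so treat this as an assumption to verify against the template's own documentation.

```python
def handle(req: str) -> str:
    """Return the number of whitespace-separated words in the request body."""
    words = req.split()
    return str(len(words))
```

The framework's tooling wraps a handler like this in a container image, so the function author does not write the HTTP server or the Dockerfile by hand.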


3. Kubeless

Kubeless is a Kubernetes-native serverless framework designed to ease the deployment of small functions. It abstracts Kubernetes’ complexities by providing CLI tools and APIs for deploying serverless functions without extensive configuration. Kubeless supports multiple languages and focuses on integrating with Kubernetes-native resources and services.

License: Apache-2.0

Repo: https://github.com/vmware-archive/kubeless

GitHub stars: 6k+

Contributors: 100+

Functions in Kubeless can be triggered by HTTP requests or pub/sub systems like Kafka, making it versatile for various application needs. The framework allows developers to define triggers, enabling event-driven function execution. Its lightweight nature and tight Kubernetes integration make Kubeless suitable for developers who favor simpler and more direct solutions.

4. Fission

Fission is an open-source serverless function platform for Kubernetes that is designed for fast deployment. It can handle high volumes of functions and automatically scales based on demand. Fission keeps a pool of pre-warmed containers ready to run function workloads, which addresses the cold-start problem commonly associated with serverless architectures.

License: Apache-2.0

Repo: https://github.com/fission/fission

GitHub stars: 8k+

Contributors: 150+

Fission emphasizes ease-of-use, supporting code updates without building container images. This feature simplifies the deployment process, enabling developers to focus on code development while Fission manages the operational aspects. It supports various language runtimes and offers an HTTP-based event system, providing a flexible serverless implementation on Kubernetes.


Best practices for serverless on Kubernetes

Leveraging serverless on Kubernetes requires adhering to several practices for optimal performance and cost efficiency.

Use event-driven architectures

Event-driven architectures are fundamental to serverless operations, allowing functions to react to triggers such as data updates, queue messages, or HTTP requests. This strategy ensures that resources are used only when necessary, optimizing both performance and cost. Implementing a pub/sub messaging model can simplify event processing and distribution.

In Kubernetes, connecting event sources to a serverless framework, for example through Knative Eventing, improves scalability and flexibility. Clearly defined event types and sources make it predictable which functions run in response to which triggers.
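The pub/sub model mentioned above can be illustrated with a toy in-process broker. This is only a sketch of the pattern: in a real cluster the broker role would be played by a system such as Kafka or Knative Eventing, and the `Broker` class, the topic name, and the event fields here are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class Broker:
    """Toy in-process broker that fans each event out to all topic subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber; return how many were invoked."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = Broker()
resized: List[str] = []
audit_log: List[str] = []

# Two independent functions react to the same event without knowing about each other.
broker.subscribe("image.uploaded", lambda e: resized.append(e["key"]))
broker.subscribe("image.uploaded", lambda e: audit_log.append(f"uploaded {e['key']}"))

delivered = broker.publish("image.uploaded", {"key": "cat.png"})
```

The publisher only knows the topic name, so new functions can be attached to an event later without changing the code that emits it.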

Optimize cold start times

Cold start times refer to the latency experienced when initiating serverless functions. Optimizing these is crucial for application responsiveness. Techniques such as reducing container image sizes and keeping a pool of pre-warmed instances ready for requests can mitigate cold start delays. Efficient caching and optimizing initialization code also aid in minimizing these latencies.

Using lightweight runtimes and languages can further reduce cold start overhead. Lazy loading, where only the components needed to accept a request are loaded at startup and the rest are initialized on first use, also shortens startup time. Addressing cold starts in these ways keeps serverless functions on Kubernetes responsive.
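A minimal sketch of lazy loading follows. The expensive setup is wrapped in a cached function so that it runs only when a request first needs it, and only once per container instance. The function names are hypothetical, and the short sleep merely stands in for real work such as loading a model or opening a database connection.

```python
import functools
import time


@functools.lru_cache(maxsize=None)
def get_lookup_table() -> dict:
    """Expensive setup, deferred until the first request that needs it."""
    time.sleep(0.05)  # stands in for loading a model, opening a connection, etc.
    return {n: n * n for n in range(1000)}


def handle_health() -> str:
    """Cheap path: never touches the expensive resource."""
    return "ok"


def handle_square(n: int) -> int:
    """First call pays the setup cost; later calls in the same instance reuse it."""
    return get_lookup_table()[n]
```

The trade-off is that the first request to hit the slow path absorbs the delay, so this suits resources that not every request needs.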

Monitor and log functions effectively

Effective monitoring and logging are crucial to managing serverless functions on Kubernetes. They provide insights into function performance and help in diagnosing and resolving issues promptly. Implementing comprehensive logging practices involves capturing function inputs, outputs, and any errors. This visibility helps in optimizing function performance and debugging.

Utilizing monitoring tools, such as Prometheus and Grafana, provides valuable metrics on resource utilization, latency, and throughput. Alerting mechanisms can be configured to notify when performance thresholds are breached.
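One way to capture inputs, outputs, errors, and latency consistently is a small decorator that emits one structured log line per invocation. The sketch below uses only the Python standard library; the `logged` decorator, the logger name, and the record fields are choices made for this example rather than a standard format.

```python
import functools
import json
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("function")


def logged(func):
    """Log inputs, output or error, and duration of each invocation as JSON."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        record = {"function": func.__name__, "args": repr(args), "kwargs": repr(kwargs)}
        try:
            result = func(*args, **kwargs)
            record.update(status="ok", result=repr(result))
            return result
        except Exception as exc:
            record.update(status="error", error=repr(exc))
            raise  # the caller still sees the original exception
        finally:
            record["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
            logger.info(json.dumps(record))

    return wrapper


@logged
def divide(a: float, b: float) -> float:
    return a / b
```

Logging raw inputs and outputs can expose sensitive data, so in practice you would likely redact or omit fields before writing them. JSON lines written to standard output or standard error are easy for cluster log collectors to parse and index.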

Secure your serverless functions

Securing serverless functions on Kubernetes involves several best practices to protect applications and data. Begin by implementing least-privilege access controls, only granting necessary permissions. Monitoring and auditing function actions is crucial for detecting unauthorized activities.

Encryption, both in transit and at rest, is essential to protect sensitive information. Keep dependencies up to date and run regular vulnerability scans to mitigate risks from outdated libraries. Network security should also be a priority: use Kubernetes’ network policies to restrict unauthorized traffic.
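As an example of restricting traffic with network policies, the sketch below builds a `NetworkPolicy` manifest that allows ingress to a function's pods only from one namespace and prints it as JSON for `kubectl apply -f`. The policy name, namespaces, and pod labels are placeholders, and the helper function is hypothetical. Enforcement also assumes the cluster's network plugin supports network policies.

```python
import json
from typing import Dict


def allow_ingress_from_namespace(
    policy_name: str,
    namespace: str,
    pod_labels: Dict[str, str],
    source_namespace: str,
) -> dict:
    """Allow ingress to the selected pods only from pods in source_namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": policy_name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": pod_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            # Kubernetes sets this label on every namespace automatically.
                            "namespaceSelector": {
                                "matchLabels": {"kubernetes.io/metadata.name": source_namespace}
                            }
                        }
                    ]
                }
            ],
        },
    }


# Placeholder names for illustration only.
policy = allow_ingress_from_namespace(
    "functions-allow-gateway", "functions", {"app": "resize-image"}, "gateway"
)
print(json.dumps(policy, indent=2))
```

Once a pod is selected by any ingress policy, traffic that no policy allows is dropped, which gives a least-privilege default at the network layer.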

Manage costs with autoscaling

Autoscaling is a useful feature for managing costs in serverless Kubernetes environments. It allows functions and resources to scale dynamically in response to current demand, keeping costs down without compromising performance. With the Horizontal Pod Autoscaler in Kubernetes, functions can scale automatically based on metrics such as CPU usage or, when custom metrics are configured, request rates.

It’s essential to configure appropriate minimum and maximum scaling limits to prevent resource overuse or underutilization. Establishing efficient autoscaling policies ensures that serverless functions maintain optimal resource usage, saving costs while remaining responsive to users.
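To see how the minimum and maximum limits interact with demand, it helps to work through the core calculation the Horizontal Pod Autoscaler documents: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below reproduces that formula with a tolerance band and clamping. It is a simplification, since the real controller also handles stabilization windows, missing metrics, and pods that are not yet ready.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA scaling decision for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: skip scaling to avoid thrashing.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # The configured limits always win over the computed value.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% CPU against a 50% target.
scaled_up = desired_replicas(4, 90, 50, min_replicas=1, max_replicas=10)
```

In this example the ratio is 1.8, so the autoscaler asks for 8 replicas. With the same inputs and a maximum of 6, the result would be capped at 6, which is how the upper limit bounds cost during a spike.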

How Instaclustr enhances serverless Kubernetes workflows

Serverless computing and Kubernetes are two groundbreaking technologies changing the way organizations build and deploy scalable applications. Add Instaclustr to the mix, and you unlock even greater efficiency and simplicity, empowering developers and DevOps teams to focus on creating rather than managing.

The Instaclustr advantage

Instaclustr amplifies the power of Kubernetes in a serverless architecture with its Instaclustr Operator, a tool that simplifies resource provisioning and management. Here’s how it works:

- Direct Cloud Resource Provisioning: The Instaclustr Operator allows you to manage cloud infrastructure directly through Kubernetes.

- Seamless API Integration: Instaclustr eliminates the need for developers to write custom code to connect APIs, simplifying the workflow.

- Streamlined Kubernetes Workflows: Teams can easily integrate Instaclustr provisioning and management within their existing Kubernetes clusters, reducing complexity and ensuring operational consistency.

By removing common manual tasks, Instaclustr empowers developers, operations teams, and site reliability engineers (SREs) to focus on value-driven activities, like optimizing applications or improving user experiences.

Best practices for serverless on Kubernetes with Instaclustr

Getting the most out of serverless Kubernetes requires a strategic approach. Here’s how to optimize your workflow with Instaclustr and Kubernetes:

- Leverage Kubernetes Operators: Use the Instaclustr Operator to simplify complex configurations and avoid coding custom integrations.

- Optimize for scalability: Align serverless workloads with Kubernetes’ autoscaling capabilities to ensure consistent performance under varying traffic loads.

- Monitor performance: Use monitoring tools to track resource usage and ensure efficient scaling and uptime.

- Implement security best practices: Leverage Kubernetes’ built-in security features and configure access controls to protect sensitive data.

- Adopt CI/CD pipelines: Pair serverless architecture with a continuous integration/continuous delivery system to accelerate deployment cycles.
