What is Apache Cassandra?

Apache Cassandra is an open-source, distributed NoSQL database system for handling large amounts of data across multiple commodity servers. It helps address the scalability and performance challenges of maintaining massive data volumes, offering high availability and a decentralized architecture.

Cassandra scales horizontally: adding nodes increases capacity with minimal downtime and spreads load across the cluster. It provides high fault tolerance and resilience, guarding against loss of service or data in the event of hardware failures. Its decentralized nature means there is no single point of failure.

The database also supports multi-datacenter operations, allowing global deployment while maintaining consistency and performance. This makes Apache Cassandra suitable for various applications, from social media platforms to financial services.

You can download Cassandra from the official site at https://cassandra.apache.org/_/index.html.

The history and evolution of Apache Cassandra

Apache Cassandra’s story began at Facebook, where it was built to power the Inbox Search feature of the social network. It was created to overcome limitations in existing databases, which struggled with the scale and reliability needs of modern web applications.

Facebook released Cassandra as an open-source project in 2008. It entered the Apache Incubator in 2009 and became a top-level Apache project in 2010. Over the years, Cassandra has seen contributions from engineers at companies such as Twitter and Netflix, as well as from the wider Apache community.

The project has evolved significantly, introducing features like a query language and improved data modeling capabilities. Regular updates have kept it relevant in a rapidly changing tech landscape, with ongoing improvements focusing on performance, accessibility, and security.

Key features and benefits of Apache Cassandra

Here are some of Cassandra’s most important capabilities.

High availability and fault tolerance

Cassandra provides high availability and fault tolerance through its distributed architecture. Data is replicated across multiple nodes, ensuring no single point of failure. This replication across different geographical regions means that even if one data center goes offline, other replicas can continue to serve requests, maintaining uptime and reliability for users.

Cassandra’s built-in support for automatic node replacement and failure handling ensures the system heals itself in case of node failures. This self-healing ability reduces maintenance efforts, allowing operations to focus on scaling and optimizing rather than managing failures.

Scalability and performance

Horizontal scaling is achieved by adding more nodes to the cluster, distributing the data across new nodes automatically. This process requires no downtime, allowing organizations to handle increased workloads without service disruptions.

Performance is further improved by Cassandra’s ability to read and write data rapidly across distributed networks. The system employs a tunable consistency model, allowing users to balance performance and data integrity based on their needs.
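The trade-off behind tunable consistency comes down to simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, not part of any Cassandra API. It shows how a quorum is derived from the replication factor and when the replicas that acknowledge a read must overlap those that acknowledged the write.

```python
def quorum(replication_factor: int) -> int:
    """Replicas required for QUORUM: a strict majority of the replication factor."""
    return replication_factor // 2 + 1


def reads_see_latest_write(replication_factor: int, write_acks: int, read_acks: int) -> bool:
    """True when any set of read replicas must overlap any set of write replicas."""
    return write_acks + read_acks > replication_factor


rf = 3
q = quorum(rf)
print(q)                                  # 2
print(reads_see_latest_write(rf, q, q))   # True: QUORUM writes + QUORUM reads
print(reads_see_latest_write(rf, 1, 1))   # False: ONE + ONE is only eventually consistent
```

Lower acknowledgement counts reduce latency, while higher counts strengthen the guarantee that a read returns the most recent write.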

Flexible data model and schema

Cassandra supports various data structures, making it adaptable to numerous use cases. Unlike traditional relational databases, Cassandra organizes data in a wide-column model with a flexible schema: tables are defined in CQL, but columns can be added to an existing table without downtime. This flexibility makes adding new fields or tables simple, enabling shorter development cycles and quicker feature rollouts.

The database’s partitioned row storage keeps queries efficient even as data structures evolve, and CQL supports collection types such as lists, maps, and sets. These let developers store rich, multi-valued data in a single row.

Distributed architecture

Cassandra uses a distributed architecture to operate across multiple nodes and data centers. This architecture eliminates central points of control, ensuring scalability and reliability. Each node in a Cassandra cluster can accept read and write requests, distributing workloads evenly to prevent bottlenecks. As demand grows, new nodes can be integrated to extend capacity.

The database also supports eventual consistency: replicas may briefly disagree after a write, but they converge on the same data over time. Because updates can propagate to replicas asynchronously, network latency between nodes does not have to slow down every request.
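To make convergence concrete, here is a minimal sketch of last-write-wins reconciliation, the timestamp-based rule Cassandra uses to decide which version of a value survives. It is a toy model under stated assumptions: replicas are plain dictionaries, the keys and values are made up, and ties between equal timestamps are ignored.

```python
from typing import Dict, Tuple

Cell = Tuple[int, str]  # (write timestamp, value)


def merge_replicas(*replicas: Dict[str, Cell]) -> Dict[str, Cell]:
    """Reconcile replicas: for each key, keep the cell with the highest timestamp."""
    merged: Dict[str, Cell] = {}
    for replica in replicas:
        for key, cell in replica.items():
            if key not in merged or cell[0] > merged[key][0]:
                merged[key] = cell
    return merged


replica_a = {"user:1": (100, "alice@old.example"), "user:2": (120, "bob@example")}
replica_b = {"user:1": (150, "alice@new.example")}  # this replica missed the user:2 write

converged = merge_replicas(replica_a, replica_b)
print(converged["user:1"][1])  # alice@new.example
print(converged["user:2"][1])  # bob@example
```

The result does not depend on the order in which replicas are merged, which is what lets nodes exchange updates in any order and still converge.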

Cassandra Query Language (CQL)

CQL, or Cassandra Query Language, is the primary interface to the database and is simpler to work with than the Thrift-based API that preceded it. Modeled after SQL, CQL lets developers perform queries, insertions, and updates using a familiar syntax. This approach lowers the learning curve for those transitioning from relational database systems.

Despite its SQL-like syntax, CQL omits some traditional constructs, such as joins and subqueries, in keeping with Cassandra’s distributed nature. Developers can use it to define data models around their query patterns and run queries that stay fast at scale.

The Apache Cassandra architecture

Apache Cassandra’s architecture is built to support large-scale, fault-tolerant data storage and retrieval across distributed networks, ensuring high availability, horizontal scalability, and resilience. Below are the key components and principles of its architecture:

- Peer-to-peer distributed system: Cassandra uses a peer-to-peer architecture where every node in the cluster is equal and can handle client requests independently. Unlike traditional master-slave models, this setup eliminates a single point of failure and allows any node to serve read and write requests. Nodes communicate through a gossip protocol that shares state information about each other every second, ensuring the cluster is aware of node statuses and failures.

- Cluster organization with data centers and racks: A Cassandra cluster can be subdivided into data centers and racks, reflecting physical infrastructure layouts. This organization aids in fault tolerance and load distribution, with replicas of data often placed across racks or data centers. Using the NetworkTopologyStrategy, users can define replication policies across data centers, enabling resilience to localized failures while maintaining low-latency access for geographically distributed clients.

- Partitioning and token ranges: Data in Cassandra is divided using a partition key, which is hashed by a partitioner to create tokens that determine data distribution across nodes. The default Murmur3Partitioner maps partition keys to a token range, ensuring even distribution. Each node is responsible for a specific token range, which shifts as nodes join or leave the cluster. This flexible partitioning supports Cassandra’s horizontal scaling.

- Virtual nodes (vnodes): To simplify token allocation, Cassandra uses virtual nodes (vnodes), which divide each node’s token range into smaller, manageable segments. Each physical node holds multiple vnodes, promoting balanced data distribution and simplifying token management when nodes are added or removed. Older releases assigned 256 vnodes to each node by default; Cassandra 4.0 lowered the default (the num_tokens setting) to 16.

- Data replication: Cassandra achieves high availability through configurable replication strategies. Data is replicated across multiple nodes, with each keyspace specifying its replication factor. Cassandra supports two primary replication strategies: SimpleStrategy for single data center setups, and NetworkTopologyStrategy for multi-data center deployments, enabling granular control over replica placement across regions.

- Data consistency: Data consistency is tunable, allowing users to specify the number of replicas that must acknowledge reads or writes. This flexibility enables configurations ranging from low-latency operations with eventual consistency to highly consistent setups with strong read/write guarantees.
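The partitioning, vnode, and replication ideas above can be sketched in a few lines. This is a simplified model, not Cassandra’s implementation: MD5 stands in for Murmur3 (which is not in the Python standard library), the node names are hypothetical, and replica placement ignores racks and data centers in the manner of SimpleStrategy.

```python
import bisect
import hashlib
from typing import List, Tuple


def token_for(key: str) -> int:
    """Hash a key to a 64-bit token. MD5 is a stand-in for Murmur3 here."""
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


def build_ring(nodes: List[str], vnodes_per_node: int) -> List[Tuple[int, str]]:
    """Give each node several tokens (vnodes) and return the ring sorted by token."""
    ring = [(token_for(f"{node}#{i}"), node) for node in nodes for i in range(vnodes_per_node)]
    return sorted(ring)


def replicas_for(ring: List[Tuple[int, str]], partition_key: str,
                 replication_factor: int) -> List[str]:
    """Walk clockwise from the key's token, collecting distinct physical nodes."""
    tokens = [token for token, _ in ring]
    start = bisect.bisect_left(tokens, token_for(partition_key)) % len(ring)
    owners: List[str] = []
    for offset in range(len(ring)):
        node = ring[(start + offset) % len(ring)][1]
        if node not in owners:
            owners.append(node)
        if len(owners) == replication_factor:
            break
    return owners


ring = build_ring(["node-a", "node-b", "node-c", "node-d"], vnodes_per_node=16)
print(replicas_for(ring, "b001", replication_factor=3))  # three distinct node names
```

Because every node owns many small token ranges, adding a fifth node to `build_ring` takes a little data from each existing node rather than splitting a single neighbor’s range.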

Write and read paths

Cassandra handles writes and reads through distinct paths:

- Write path: When data is written, it is first logged to a commit log for durability. Then, data is stored in a memtable (an in-memory structure) until it is flushed to disk as SSTables (sorted string tables). SSTables are immutable and compacted periodically to consolidate data and optimize disk space.

- Read path: Reads are served by consulting the row cache and partition key cache, using bloom filters to skip SSTables that cannot contain the requested partition, and then merging data from the memtable and the remaining SSTables. The read repair process helps keep replicas consistent by synchronizing data across nodes during read operations.
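The write and read paths can be illustrated with a toy single-node storage engine. This is a sketch under simplifying assumptions, not Cassandra’s real code: the class name `TinyNode` and the tiny memtable limit are made up, everything lives in memory, and caches and bloom filters are left out.

```python
from typing import Dict, List, Optional, Tuple


class TinyNode:
    """Toy storage engine showing commit log -> memtable -> SSTable."""

    def __init__(self, memtable_limit: int = 2) -> None:
        self.commit_log: List[Tuple[str, str]] = []            # append-only durability log
        self.memtable: Dict[str, str] = {}                     # recent writes, in memory
        self.sstables: List[Tuple[Tuple[str, str], ...]] = []  # immutable, oldest first
        self.memtable_limit = memtable_limit

    def write(self, key: str, value: str) -> None:
        self.commit_log.append((key, value))  # 1. log first for durability
        self.memtable[key] = value            # 2. then update the memtable
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        """3. Persist the memtable as a sorted, immutable SSTable."""
        self.sstables.append(tuple(sorted(self.memtable.items())))
        self.memtable = {}
        self.commit_log.clear()  # flushed data no longer needs replaying

    def read(self, key: str) -> Optional[str]:
        if key in self.memtable:                 # newest data lives in memory
            return self.memtable[key]
        for sstable in reversed(self.sstables):  # then check the newest SSTable first
            for k, v in sstable:
                if k == key:
                    return v
        return None


node = TinyNode(memtable_limit=2)
node.write("b001", "v1")
node.write("b002", "v1")    # reaching the limit triggers a flush
node.write("b001", "v2")    # the newer value stays in the memtable
print(node.read("b001"))    # v2
print(node.read("b002"))    # v1
print(len(node.sstables))   # 1
```

Note that the old value of `b001` still sits in the SSTable after the update. Nothing is overwritten in place, which is why compaction is needed later to reclaim space.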

Anti-entropy and repair mechanisms

To maintain consistency across replicas, Cassandra uses anti-entropy mechanisms:

- Hinted handoff: Temporarily stores updates intended for unavailable nodes, replaying them once the node is back online.

- Read repair: Compares data versions across replicas during reads and corrects any inconsistencies.

- Manual repair: Running a repair (for example, with nodetool repair) compares replicas using Merkle trees to detect and resolve discrepancies, ensuring data consistency over time.
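The Merkle-tree idea behind repair can be sketched as follows. This is a simplified illustration rather than Cassandra’s algorithm: rows are grouped into a fixed number of hash buckets that stand in for token ranges, and all function names are hypothetical.

```python
import hashlib
from typing import Dict, List


def leaf_hashes(data: Dict[str, str], buckets: int = 4) -> List[str]:
    """Hash the rows that fall into each bucket (a stand-in for a token range)."""
    groups: List[List[str]] = [[] for _ in range(buckets)]
    for key in sorted(data):
        index = int(hashlib.sha256(key.encode()).hexdigest(), 16) % buckets
        groups[index].append(f"{key}={data[key]}")
    return [hashlib.sha256("|".join(rows).encode()).hexdigest() for rows in groups]


def root_hash(leaves: List[str]) -> str:
    """Combine hashes pairwise until a single root remains."""
    level = leaves
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]  # duplicate the last hash on odd-sized levels
        level = [hashlib.sha256((level[i] + level[i + 1]).encode()).hexdigest()
                 for i in range(0, len(level), 2)]
    return level[0]


def mismatched_buckets(a: Dict[str, str], b: Dict[str, str], buckets: int = 4) -> List[int]:
    """Return the buckets whose contents differ; only those need to be exchanged."""
    leaves_a, leaves_b = leaf_hashes(a, buckets), leaf_hashes(b, buckets)
    if root_hash(leaves_a) == root_hash(leaves_b):
        return []  # identical roots: replicas agree, nothing to transfer
    return [i for i, (x, y) in enumerate(zip(leaves_a, leaves_b)) if x != y]


replica_a = {"b001": "Data Structures", "b002": "Algorithms", "b003": "Cassandra"}
replica_b = dict(replica_a, b002="Algorithms, 2nd ed.")
print(mismatched_buckets(replica_a, replica_a))        # []
print(len(mismatched_buckets(replica_a, replica_b)))   # 1
```

Comparing hashes instead of rows means two replicas that agree exchange almost no data, and replicas that disagree only exchange the ranges that differ.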

Tips from the expert

Ritam Das

Solution Architect

Ritam Das is a trusted advisor with a proven track record in translating complex business problems into practical technology solutions, specializing in cloud computing and big data analytics.

In my experience, here are tips that can help you better work with Apache Cassandra:

- Choose partition keys carefully: Effective partition keys are essential for even data distribution and avoiding hotspots. Consider your access patterns and distribute keys to prevent all reads/writes from focusing on a few nodes, which could degrade performance.

- Use Time to Live (TTL) for expiring data: Cassandra supports TTL on data, which can help manage disk space and maintain only relevant data. This is especially useful for applications that deal with transient data, like session management or time-series data.

- Understand Cassandra’s properties and their effects: When getting started with Cassandra, especially from a SQL background, take time to learn the new operational tasks and how core concepts differ from SQL databases. Keep the default configuration until you understand the effect of a change (e.g., don’t use Lightweight Transactions (LWT) until you understand the exact performance tradeoffs). Backups and restores, repairs, tombstone management, and migrating away from stored procedures (if coming from SQL) are all key concepts to understand when operating Cassandra.

- Use the right hardware: Cassandra can run on commodity servers, but the right hardware choice can prove incredibly valuable. Run extensive load tests and measure the results with cassandra-stress. Refer to the hardware guidance in the Cassandra documentation.

- Evaluate the right monitoring tools for you: Monitoring tools such as Prometheus with Grafana provide advanced metrics and insights into cluster health, allowing for more proactive maintenance and rapid issue diagnosis.
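As an illustration of the TTL tip, the sketch below models how expiring data behaves from a reader’s point of view. In CQL the same effect is requested with `USING TTL <seconds>` on an INSERT or UPDATE. The class `TtlTable` is a hypothetical toy, and the `now` parameter exists only so the example is deterministic.

```python
import time
from typing import Dict, Optional, Tuple


class TtlTable:
    """Toy key-value table whose rows expire after a time to live."""

    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[str, Optional[float]]] = {}

    def insert(self, key: str, value: str, ttl_seconds: Optional[int] = None,
               now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        expires_at = None if ttl_seconds is None else now + ttl_seconds
        self._rows[key] = (value, expires_at)

    def get(self, key: str, now: Optional[float] = None) -> Optional[str]:
        now = time.time() if now is None else now
        row = self._rows.get(key)
        if row is None:
            return None
        value, expires_at = row
        if expires_at is not None and now >= expires_at:
            return None  # expired data is invisible to reads
        return value


sessions = TtlTable()
sessions.insert("session:42", "alice", ttl_seconds=1800, now=0)
print(sessions.get("session:42", now=60))     # alice
print(sessions.get("session:42", now=1800))   # None
```

In Cassandra itself, expired cells become tombstones and the disk space is only reclaimed later by compaction, so TTL-heavy tables benefit from a compaction strategy chosen with that in mind.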

Integrating Cassandra with other technologies

Cassandra integrates with other platforms, most notably Apache products like Spark and Kafka.

Using Cassandra with Apache Spark

Integrating Cassandra with Apache Spark combines the database’s data storage capabilities with Spark’s data processing engine. This integration enables real-time analytics and complex computations on large data sets directly within Cassandra, leveraging the in-memory processing power of Spark for rapid query execution.

Connectors such as the DataStax Spark Cassandra Connector support data exchange between Spark and Cassandra, enabling complex data workflows and transformations. Developers can write applications that query Cassandra for fresh data, transform it using Spark, and write results back into Cassandra.

Integrating with Kafka for streaming data

Integrating Kafka with Cassandra creates a high-velocity data processing pipeline for handling streaming data efficiently. Kafka serves as a messaging queue, capturing live data streams and feeding them into Cassandra for durable, on-disk storage.

DataStax’s Kafka Connector moves data between Kafka topics and Cassandra tables, so that systems ingest, process, and store data consistently. This setup supports near-real-time streaming and storage workflows, allowing users to react to events as they happen.

Best practices for Apache Cassandra

Organizations can implement the following practices to ensure the most effective use of Cassandra.

Data modeling guidelines

It’s important to understand query patterns before designing data models. Prioritize read optimization, ensuring data retrieval aligns with anticipated queries to minimize cross-partition requests. Utilize composite keys to partition data intelligently and employ clustering columns for efficient sorting and access patterns.

Additionally, avoid anti-patterns like large partitions or excessive use of secondary indexes, which degrade performance. Design models with a clear understanding of write paths and potential read paths, ensuring that each component of the data model supports fast data access and mutation.
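A query-first model can be sketched as a table whose partition key matches the lookup and whose clustering columns match the sort order. The example below is a toy model, assuming a hypothetical table declared as `PRIMARY KEY ((author), published_year, title)`; the class name and the sample rows are illustrative.

```python
import bisect
from collections import defaultdict
from typing import DefaultDict, List, Tuple

Row = Tuple[int, str]  # clustering columns: (published_year, title)


class BooksByAuthor:
    """Each author is one partition; rows inside it stay sorted by clustering columns."""

    def __init__(self) -> None:
        self._partitions: DefaultDict[str, List[Row]] = defaultdict(list)

    def insert(self, author: str, published_year: int, title: str) -> None:
        rows = self._partitions[author]
        row = (published_year, title)
        index = bisect.bisect_left(rows, row)
        if index == len(rows) or rows[index] != row:  # same primary key: no duplicate row
            rows.insert(index, row)

    def published_since(self, author: str, year: int) -> List[Row]:
        """Range query that touches one partition and reads a contiguous slice."""
        rows = self._partitions.get(author, [])
        return rows[bisect.bisect_left(rows, (year, "")):]


table = BooksByAuthor()
table.insert("Jane Doe", 2021, "Data Structures in Practice")
table.insert("Jane Doe", 2018, "Intro to Graphs")
table.insert("John Smith", 2020, "Understanding Algorithms")
print(table.published_since("Jane Doe", 2020))
# [(2021, 'Data Structures in Practice')]
```

The query never scans other authors’ partitions. A query such as "all books published since 2020, by any author" would not fit this table and would call for a second table modeled around that access pattern.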

Performance tuning techniques

Adjust key parameters like heap size, cache capacity, and read/write consistency levels to match workloads. Monitoring real-time performance metrics using tools like JMX or third-party software assists in identifying bottlenecks for timely resolution.

Additionally, maintenance of SSTables through optimal compaction strategies ensures disk space efficiency and access performance. Regularly evaluating infrastructure needs against workload patterns aids in determining appropriate cluster scale adjustments.
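What compaction accomplishes can be shown with a small merge routine. This is a conceptual sketch, not one of Cassandra’s compaction strategies: SSTables are modeled as dictionaries, a `None` value marks a tombstone, and tombstones are purged immediately, whereas Cassandra waits for the table’s gc_grace_seconds to elapse.

```python
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, Optional[str]]  # (write timestamp, value); None marks a tombstone
SSTable = Dict[str, Cell]


def compact(sstables: List[SSTable], drop_tombstones: bool = True) -> SSTable:
    """Merge SSTables, keeping the newest cell per key and optionally purging deletes."""
    merged: SSTable = {}
    for table in sstables:
        for key, cell in table.items():
            if key not in merged or cell[0] > merged[key][0]:
                merged[key] = cell
    if drop_tombstones:
        merged = {key: cell for key, cell in merged.items() if cell[1] is not None}
    return dict(sorted(merged.items()))


older = {"b001": (100, "v1"), "b002": (100, "v1")}
newer = {"b001": (200, "v2"), "b002": (210, None)}  # b002 was deleted later
print(compact([older, newer]))  # {'b001': (200, 'v2')}
```

Two input tables holding four cells collapse into one table with a single live cell, which is where the disk space and read performance gains come from.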

Deployment and scaling strategies

Deploying Cassandra involves strategic planning to ensure resilience, performance, and cost-effectiveness. Opt for a multi-datacenter deployment model to support fault tolerance and low-latency regional access. Adequate replication strategies and node configurations are essential to distribute loads evenly, preventing bottlenecks and single points of failure.

Scaling strategies should favor horizontal scale-out by adding nodes rather than vertical scaling, maintaining Cassandra’s design benefits. Plan ahead based on forecasted data growth and application demand to ensure infrastructure supports scalability needs without excessive costs.
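A rough capacity estimate helps with this kind of planning. The sketch below is back-of-the-envelope arithmetic only: the function name is hypothetical, and the default `max_fill_ratio` of 0.5 is an assumption that leaves free disk space for compaction and growth, not an official recommendation.

```python
import math


def nodes_needed(raw_data_tb: float, replication_factor: int,
                 usable_tb_per_node: float, max_fill_ratio: float = 0.5) -> int:
    """Estimate node count from replicated data volume and per-node capacity."""
    if not 0 < max_fill_ratio <= 1:
        raise ValueError("max_fill_ratio must be in (0, 1]")
    replicated_tb = raw_data_tb * replication_factor
    estimate = math.ceil(replicated_tb / (usable_tb_per_node * max_fill_ratio))
    return max(estimate, replication_factor)  # need at least one node per replica


print(nodes_needed(raw_data_tb=10, replication_factor=3, usable_tb_per_node=2))  # 30
```

Re-running the estimate with forecasted data volumes shows how many nodes to add and when, before disks fill up. Throughput and latency targets still need to be validated with load tests.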

Backup and recovery procedures

Regular, consistent backups using tools like nodetool snapshot ensure data recoverability, defending against corruption or loss. Additionally, leveraging incremental backup solutions reduces storage overhead, maintaining backups without full repetition each cycle.

In case of failure, restoring from recent backups is simplified with Cassandra’s built-in utilities. Establish clear recovery protocols, including restoration testing, that ensure team readiness and system preparedness for any unexpected data integrity challenges.
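Snapshots are easier to manage when each one carries a predictable tag. The helper below only builds the command line for `nodetool snapshot` with its `-t` tag option; it does not run anything. The function name and the tag format are assumptions made for illustration.

```python
import shlex
from datetime import datetime, timezone
from typing import List, Optional


def snapshot_command(keyspace: str, tag: Optional[str] = None,
                     when: Optional[datetime] = None) -> List[str]:
    """Build (but do not run) a nodetool snapshot invocation with a timestamped tag."""
    when = when or datetime.now(timezone.utc)
    tag = tag or f"{keyspace}-{when:%Y%m%dT%H%M%SZ}"
    return ["nodetool", "snapshot", "-t", tag, "--", keyspace]


cmd = snapshot_command("library", when=datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc))
print(shlex.join(cmd))  # nodetool snapshot -t library-20240102T030405Z -- library
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` on a host where nodetool is available, once per node, since a snapshot only covers the node it runs on.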

Maintenance and upgrading tips

Maintaining and upgrading Cassandra systems demands a well-thought-out plan to minimize disruption. Upgrade nodes one at a time using rolling upgrades, so that only a fraction of the cluster is offline at any moment and availability is preserved. Hold back schema changes and newly introduced features until every node runs the same version, to prevent version conflicts and maintain data compatibility across the cluster.

Perform routine performance audits on node health and data integrity, adjusting configurations or strategies before they potentially degrade system function. Regularly update infrastructure components—such as JVM and underlying OS—to leverage performance improvements and security updates.

Managed Apache Cassandra option

For organizations looking to leverage Cassandra without the complexity of managing infrastructure and maintenance, managed Cassandra services provide a convenient solution. Managed Cassandra options are offered by major cloud providers such as AWS (through Amazon Keyspaces), Microsoft Azure, and Google Cloud, as well as specialized providers like DataStax Astra DB.

These services enable users to access Cassandra’s features—such as high scalability, fault tolerance, and multi-region support—while offloading operational tasks like setup, scaling, and regular maintenance to the provider. They typically handle critical activities like automated backups, patching, monitoring, and node replacements.

Managed Cassandra services also provide enhanced security options, including encryption at rest, network isolation, and compliance with industry standards, which can be more challenging to implement in self-managed environments.


Quick tutorial: Getting started with Apache Cassandra

This quickstart guide walks through setting up Apache Cassandra using Docker and performing basic operations with the Cassandra Query Language (CQL). Follow these steps to set up the environment, create a keyspace and table, and interact with the database. The instructions are adapted from the Cassandra documentation.

Step 1: Pull Cassandra with Docker

First, ensure Docker is installed on your system. Cassandra can be run on Docker, which simplifies the installation process. Pull the latest Cassandra image by running the following command:

    docker pull cassandra:latest

Step 2: Start Cassandra

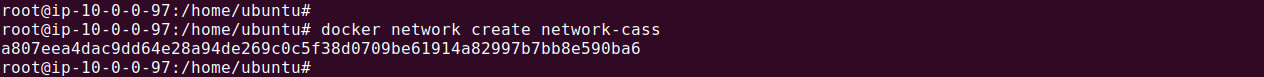

Create a Docker network to run Cassandra in isolated networking, which allows container communication without exposing ports on the host. Then, start a Cassandra container:

    docker network create network-cass
    docker run --rm -d --name cassandra-node --hostname cassandra-host --network network-cass cassandra

Here, docker network create network-cass creates a network, and the docker run command starts a Cassandra container within this network.

To open the Cassandra command-line shell (cqlsh) inside the container, run the following command. Cassandra can take a minute or so to start, so if cqlsh reports a connection error, wait and retry:

    docker exec -it cassandra-node cqlsh

Step 3: Create CQL script

To define and insert data, create a file named data.cql. This file will contain a CQL script for creating a keyspace, defining a table, and adding sample data.

Example data.cql content:

    CREATE KEYSPACE IF NOT EXISTS library WITH REPLICATION = {
        'class' : 'SimpleStrategy',
        'replication_factor' : '1'
    };

    CREATE TABLE IF NOT EXISTS library.books (
        book_id text PRIMARY KEY,
        title text,
        author text,
        published_date timestamp
    );

    INSERT INTO library.books (book_id, title, author, published_date)
    VALUES ('b001', 'Data Structures in Practice', 'Jane Doe', toTimestamp(now()));
    INSERT INTO library.books (book_id, title, author, published_date)
    VALUES ('b002', 'Understanding Algorithms', 'John Smith', toTimestamp(now()));

In this script:

- Keyspace creation: CREATE KEYSPACE creates the library keyspace (a namespace), storing data with one replica.

- Table creation: CREATE TABLE defines a table named books with columns for book_id, title, author, and published_date.

- Data insertion: INSERT INTO adds rows for two books with details like ID, title, author, and the current timestamp for published_date.

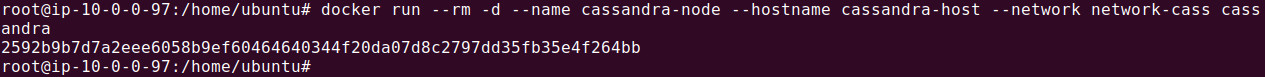

Step 4: Load data with CQLSH

Use cqlsh, the Cassandra shell, to load the data.cql script into the database. Run the following command to copy data.cql to the container’s /tmp/ folder:

    docker cp data.cql cassandra-node:/tmp/data.cql

The next command runs data.cql from the container’s /tmp/ folder against Cassandra, creating the keyspace and table and inserting the initial data:

    docker exec -i cassandra-node cqlsh -f /tmp/data.cql

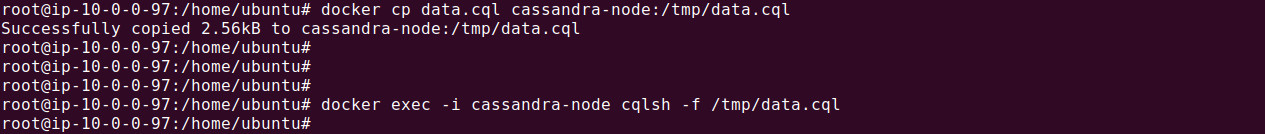

Step 5: Interactive CQLSH session

To start an interactive CQL session with Cassandra, use the following command:

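    docker run --rm -it --network network-cass \
        nuvo/docker-cqlsh cqlsh cassandra-node 9042 --cqlversion='3.4.6'

Note: This connects to the cassandra-node container from Step 2 on Cassandra’s default CQL port, 9042. If your node listens on a non-default port, change the port argument accordingly. The --cqlversion value must be one the server supports, so adjust it if cqlsh reports a version mismatch.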

Once connected, there should be a cqlsh> prompt where developers can run CQL commands interactively.

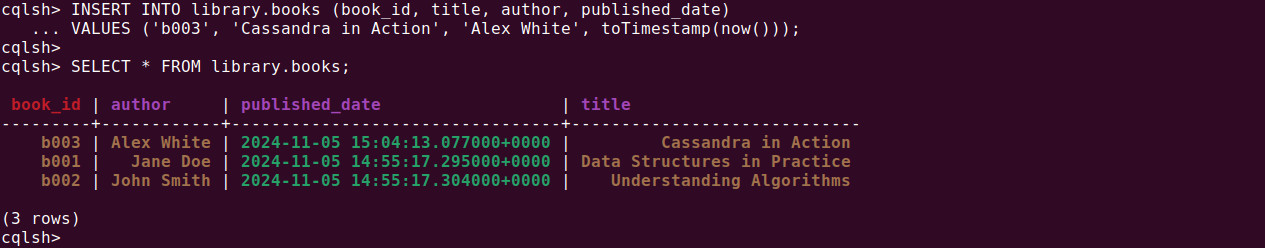

Step 6: Read data

To view data in the books table, use a SELECT statement in cqlsh:

    SELECT * FROM library.books;

This command retrieves all rows in the books table, displaying book_id, title, author, and published_date.

Step 7: Insert additional data

To add more entries to the books table, use an INSERT statement:

    INSERT INTO library.books (book_id, title, author, published_date)
    VALUES ('b003', 'Cassandra in Action', 'Alex White', toTimestamp(now()));

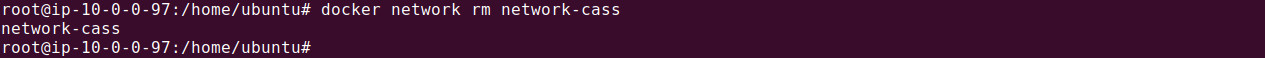

Step 8: Clean Up

After testing, stop and remove the Cassandra container and network:

    docker kill cassandra-node
    docker network rm network-cass

NetApp Instaclustr: Simplifying Apache Cassandra deployment and management

Apache Cassandra has emerged as a popular choice for managing large-scale, distributed databases due to its scalability, fault tolerance, and high performance. However, deploying and managing Cassandra clusters can be complex and time-consuming. That’s where NetApp Instaclustr comes in. NetApp Instaclustr simplifies Apache Cassandra deployment and management, empowering organizations to leverage the full potential of this powerful NoSQL database.

NetApp Instaclustr takes the hassle out of deploying Apache Cassandra clusters by providing a streamlined and automated process. With just a few clicks, organizations can create and configure Cassandra clusters in the cloud, eliminating the need for manual setup and reducing deployment time significantly. This allows developers to focus on their applications rather than spending valuable time on infrastructure management.

NetApp Instaclustr handles the underlying infrastructure for Apache Cassandra, ensuring high availability, fault tolerance, and data replication. It takes care of tasks such as cluster provisioning, software updates, and security patches, relieving organizations of the operational burden. This managed infrastructure approach allows businesses to scale their Cassandra clusters seamlessly and focus on building robust and scalable applications.

One of the key advantages of Apache Cassandra is its ability to scale horizontally to handle massive workloads. NetApp Instaclustr fully leverages this capability by providing seamless scalability for Cassandra clusters. As data volumes grow, organizations can easily add or remove nodes to meet their changing needs, ensuring optimal performance and responsiveness. This scalability empowers businesses to handle increasing data demands without sacrificing performance.

NetApp Instaclustr places a strong emphasis on data security and compliance. It offers robust security features, including encryption at rest and in transit, role-based access control, and integration with various identity providers. This ensures that sensitive data stored in Apache Cassandra remains protected from unauthorized access. Additionally, NetApp Instaclustr helps organizations meet regulatory compliance requirements by providing audit logs and facilitating data governance.

NetApp Instaclustr provides comprehensive monitoring and support services for Apache Cassandra clusters. It offers real-time monitoring capabilities, allowing organizations to gain insights into cluster performance, resource utilization, and potential bottlenecks. In case of any issues or failures, NetApp Instaclustr’s support team is readily available to provide assistance and ensure minimal downtime.

Managing and maintaining Apache Cassandra clusters can be costly, especially when it comes to infrastructure and operational expenses. NetApp Instaclustr helps optimize costs by offering flexible pricing models. Organizations can choose from pay-as-you-go options, allowing them to scale resources based on demand and pay only for what they use. This eliminates the need for upfront investments in infrastructure and provides cost predictability.
