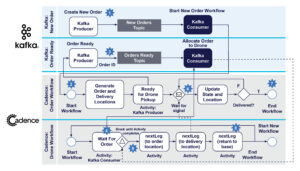

In this blog, we continue with our Cadence Drone Delivery application, with a summary of the complete workflow and the Cadence+Kafka integration patterns. We’ll also take a look at some of the new Cadence features we used (retries, continue as new, queries, side-effects), and reveal an example trace of a drone delivery.

1. Movement and Delivery


The details of Drone movement were explained in the previous blog, but to summarise, the Drone Workflow is responsible for “moving” from the base to the order location, picking up the order, flying to the delivery location, dropping the delivery, and returning to base. This is done with the nextLeg() activity. If the drone has picked up the order but hasn’t delivered it yet, it also sends location state change signals to the Order Workflow (6). An interesting extension could be to update an ETA in the Order Workflow. Once the drone returns to base it “checks” the delivery and signals the Order Workflow that it’s complete, resulting in the Order Workflow completing (7), and the Drone Workflow starting a new instance of itself (8).

2. Summary of Cadence+Kafka Integration Patterns

So now we’ve seen several examples of different ways that Cadence and Kafka can integrate, including some from our previous blog. We’ll summarize them in a table for ease of reference.

| Pattern Number | Pattern Name | Direction | Purpose | Features | Examples |
|---|---|---|---|---|---|
| 1 | Send a message to Kafka | Cadence → Kafka | Send a single message to Kafka topic, simple notification (no response) | Wraps a Kafka Producer in a Cadence Activity | Blog 2, Blog 4 |
| 2 | Request/response from Cadence to Kafka | Cadence → Kafka → Cadence | Reuse Kafka microservice from Cadence, request and response to/from Kafka | Wraps a Kafka Producer in a Cadence Activity to send message and header meta-data for reply; Kafka consumer uses meta-data to signal Cadence Workflow or Activity with result to continue | Blog 2 |
| 3 | Start New Cadence Workflow from Kafka | Kafka → Cadence | Start Cadence Workflow from Kafka Consumer in response to a new record being received | Kafka Consumer starts Cadence Workflow instance running, one instance per record received | Blog 4 |
| 4 | Get the next job from a queue | Cadence → Kafka → Cadence | Each workflow instance gets a single job from a Kafka topic to process | Cadence Activity wraps a Kafka Consumer which continually polls the Kafka topic, returns a single record only, transient for the duration of activity only | Blog 4 |
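
To make Pattern 4 a little more concrete, here is a minimal sketch of the "poll until you have exactly one job" idea. It is a stand-in only: a `BlockingQueue` plays the role of the Kafka topic, the class name `NextJobSketch` is hypothetical, and `waitForOrder()` merely borrows the name of the real activity. No Kafka or Cadence classes are involved, so offsets, consumer groups and activity heartbeats are all left out.

```java
import java.time.Duration;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Toy stand-in for Pattern 4: an "activity" that polls a queue until it has
// obtained exactly one job. The BlockingQueue is an assumption standing in for
// the Kafka topic; this is not the demo application's code.
final class NextJobSketch {

    // Hypothetical equivalent of waitForOrder(): repeats short polls and
    // returns the first record it sees, leaving later records on the queue.
    static String waitForOrder(BlockingQueue<String> topic, Duration pollTimeout) {
        try {
            while (true) {
                String record = topic.poll(pollTimeout.toMillis(), TimeUnit.MILLISECONDS);
                if (record != null) {
                    return record; // a single job only
                }
                // Nothing arrived within the timeout: poll again.
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted while waiting for an order", e);
        }
    }

    public static void main(String[] args) {
        BlockingQueue<String> topic = new LinkedBlockingQueue<>();
        topic.add("order-A");
        topic.add("order-B");
        System.out.println("got job: " + waitForOrder(topic, Duration.ofMillis(100)));
        System.out.println("jobs left on topic: " + topic.size());
    }
}
```

The point of the shape is that each caller takes one job and leaves the rest for other callers, which is what lets many Drone Workflows share a single topic of orders.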

3. New Cadence Features Used

In the Drone Delivery Demo application, we’ve snuck in a few new Cadence features, so I’ll complete this blog by highlighting them.

3.1 Cadence Retries


Cadence Activities and Workflows can fail for a variety of reasons, including timeouts. You can either handle retries manually or get the Cadence server to retry for you, which is easier. The Cadence documentation on retries is here and here.

For my Drone Delivery application, I decided to use retries for my activities. This is because they are potentially “long” running, and if they fail they can be resumed and continue from where they left off. The waitForOrder() activity is a blocking activity that only returns when it has acquired an Order that needs delivery, so it can potentially take an indefinite period of time. It’s also idempotent, so calling it multiple times works fine (as we saw above, it’s actually a Kafka consumer polling a topic). The nextLeg() activity is responsible for plotting the Drone’s progress from one location to another, so it can take many minutes to complete. If it fails, we want it to resume, but from the current location (the latest version now uses a query method to get the current drone location at the start of the activity).

The easiest way to set the retry options is with a @MethodRetry annotation in the Activities interface, as in this example. Getting the settings right, including the times, may be challenging, however, and I’ve only “guessed” these:

```java
@MethodRetry(maximumAttempts = 3, initialIntervalSeconds = 1, expirationSeconds = 300, maximumIntervalSeconds = 30)
void nextLeg(LatLon start, LatLon end, boolean updateOrderLocation, String orderID);
```

- maximumAttempts is the maximum number of attempts permitted (the first try plus any retries) before actual failure

- initialIntervalSeconds is the delay until first retry

- expirationSeconds is the maximum number of seconds for all the retry attempts.

- Retries stop whenever maximumAttempts or expirationSeconds is reached (and only one is required)

- maximumIntervalSeconds is the cap on the interval between retries. An exponential backoff strategy is used, and this setting stops the interval from growing too large
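
To get a feel for what these settings mean in practice, the sketch below works out the waits between attempts. It is a back-of-the-envelope model, not Cadence code, and it rests on a few assumptions: the class `RetryScheduleSketch` and its method are hypothetical, the backoff coefficient is taken to be 2.0 (the annotation above doesn’t set one), maximumAttempts is taken to include the first try, and the time each attempt spends running is ignored when checking the expiration.

```java
import java.util.ArrayList;
import java.util.List;

// Toy calculation of the waits between attempts under exponential backoff.
// This is NOT Cadence code; it only mirrors the four annotation settings.
final class RetryScheduleSketch {

    // Returns the delay (in seconds) before each retry. The first attempt runs
    // immediately, so at most maximumAttempts - 1 delays are returned.
    static List<Long> retryDelays(int maximumAttempts, long initialIntervalSeconds,
                                  long maximumIntervalSeconds, long expirationSeconds,
                                  double backoffCoefficient) {
        List<Long> delays = new ArrayList<>();
        double interval = initialIntervalSeconds;
        long elapsed = 0;
        for (int attempt = 2; attempt <= maximumAttempts; attempt++) {
            // Cap the exponentially growing interval.
            long delay = Math.min(Math.round(interval), maximumIntervalSeconds);
            if (elapsed + delay > expirationSeconds) {
                break; // the next retry would fall outside the expiration window
            }
            delays.add(delay);
            elapsed += delay;
            interval *= backoffCoefficient;
        }
        return delays;
    }

    public static void main(String[] args) {
        // Settings from the nextLeg() annotation, with an assumed coefficient of 2.0.
        System.out.println(retryDelays(3, 1, 30, 300, 2.0));
        // More attempts, to show the 30 second cap taking effect.
        System.out.println(retryDelays(8, 1, 30, 300, 2.0));
    }
}
```

Under these assumptions the nextLeg() settings give waits of 1 and 2 seconds, so the expirationSeconds and maximumIntervalSeconds values never come into play. With eight attempts the waits would be 1, 2, 4, 8, 16, 30 and 30 seconds, which is where the cap starts to matter.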

3.2 Cadence Continue As New

You may be tempted to keep a Cadence workflow running forever; after all, they are designed for long-running processes. However, this is generally regarded as a “bad thing” as the size of the workflow state will keep on increasing, possibly exceeding the maximum state size, and slowing down recomputing the current state of workflows from the state history. The simple solution that I used is to start a new Drone workflow at the end of the existing workflow, using Workflow.continueAsNew(), which starts a new workflow instance with the same workflow ID as the original, but none of its state. This means that Drone Workflows start at the base, are fully charged, and don’t have an Order to pick up yet.
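
The effect on history size can be illustrated with a toy model. Nothing here is Cadence code: the class `ContinueAsNewSketch` is hypothetical, its `history` list merely stands in for the event history that Cadence keeps, its `continueAsNew()` method is a stand-in for the real call, and the state names are borrowed from the example trace.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of a long-lived drone workflow. Not Cadence code: the history
// list merely stands in for the event history that Cadence replays.
final class ContinueAsNewSketch {
    // States taken from the example trace; one delivery appends all of them.
    static final List<String> CYCLE = List.of(
            "gotOrder", "flyingToOrder", "pickingUpOrder", "startedDelivery",
            "droppingOrder", "returningToBase", "backAtBase", "charged");

    final String workflowId;
    final List<String> history = new ArrayList<>();
    String orderId; // null until an order has been assigned

    ContinueAsNewSketch(String workflowId) {
        this.workflowId = workflowId;
    }

    // One full delivery: remember the order and append its events.
    void deliver(String orderId) {
        this.orderId = orderId;
        history.addAll(CYCLE);
    }

    // Hypothetical stand-in for Workflow.continueAsNew(): same workflow ID,
    // but an empty history and no order.
    ContinueAsNewSketch continueAsNew() {
        return new ContinueAsNewSketch(workflowId);
    }

    public static void main(String[] args) {
        ContinueAsNewSketch looping = new ContinueAsNewSketch("Drone_0");
        ContinueAsNewSketch chained = new ContinueAsNewSketch("Drone_0");
        for (int i = 0; i < 100; i++) {
            looping.deliver("order_" + i);     // one run that never ends
            chained.deliver("order_" + i);
            chained = chained.continueAsNew(); // fresh run after each delivery
        }
        System.out.println("single run history:  " + looping.history.size() + " events");
        System.out.println("after continueAsNew: " + chained.history.size() + " events");
    }
}
```

After 100 deliveries the single run is carrying 800 events, while the chained instance never holds more than one delivery’s worth and starts each run empty.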

3.3 Cadence Queries

In the 2nd Cadence blog, we discovered that workflows must define an interface class, and the interface methods can have annotations including @WorkflowMethod (the entry point to a workflow, exactly one method must have this), and @SignalMethod (a method that reacts to external signals, zero or more methods can have this annotation).

However, there’s another annotation that we didn’t use, @QueryMethod. This annotation indicates a method that reacts to synchronous query requests, and you can have lots of them. They are designed to expose state to other workflows, etc., so they are basically “getter” methods. For example, here’s the full list of methods, including some query methods, for the OrderWorkflow:

```java
public interface OrderWorkflow {
    @WorkflowMethod(executionStartToCloseTimeoutSeconds = (int)maxFlightTime, taskList = orderActivityName)
    String startWorkflow(String name);
    @SignalMethod
    void updateGPSLocation(LatLon l);
    @SignalMethod
    void signalOrder(String msg);
    @SignalMethod
    void updateLocation(String loc);
    @QueryMethod
    String getState();
    @QueryMethod
    LatLon getOrderLocation();
    @QueryMethod
    LatLon getDeliveryLocation();
}
```

Queries must be read-only and non-blocking. One major difference between signals and queries that I noticed is that signals only work on non-completed workflows, but queries work on both non-completed and completed workflows. For completed workflows, the workflows are automatically restarted so that their end state can be returned. Clever!

3.4 Cadence Side Effects

Cadence Workflow code must in general be deterministic


A final trick that I used for this application was due to my use of random numbers to compute Order and Delivery locations, in the newDestination() function. In order to use this correctly in the Order Activities Workflow I had to tell Cadence that it has side effects using the Workflow.sideEffect() method. This allows workflows to execute the provided function once and records the result into the workflow history. The recorded history result will be returned without executing the provided function during replay. This guarantees the deterministic requirement for workflows, as the exact same result will be returned in the replay. Here’s my example from the startWorkflow() method of the Order workflow (above):

```java
startLocation = Workflow.sideEffect(LatLon.class, () -> DroneMaths.newDestination(baseLocation, 0.1, maxLegDistance));

deliveryLocation = Workflow.sideEffect(LatLon.class, () -> DroneMaths.newDestination(startLocation, 0.1, maxLegDistance));
```
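
The record-then-replay behaviour described above can be imitated in a few lines of plain Java. This is a toy model of the idea only, not how Cadence implements it: the class `SideEffectSketch` is hypothetical, an in-memory list stands in for the workflow history, and its `sideEffect()` method just mimics the shape of the real call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.function.Supplier;

// Toy model of record-then-replay for non-deterministic values.
// It imitates the idea behind Workflow.sideEffect(); it is not Cadence code.
final class SideEffectSketch {
    private final List<Object> recorded; // stands in for the workflow history
    private final boolean replaying;
    private int next = 0;                // index of the next side effect

    // First execution: start with an empty record.
    SideEffectSketch() {
        this(new ArrayList<>(), false);
    }

    private SideEffectSketch(List<Object> recorded, boolean replaying) {
        this.recorded = recorded;
        this.replaying = replaying;
    }

    // Replay: a new execution that reuses the record of this one.
    SideEffectSketch replay() {
        return new SideEffectSketch(recorded, true);
    }

    <T> T sideEffect(Class<T> type, Supplier<T> func) {
        if (replaying && next < recorded.size()) {
            // Replaying: return the recorded value without calling func.
            return type.cast(recorded.get(next++));
        }
        // First time through: run func once and record its result.
        T value = func.get();
        recorded.add(value);
        next++;
        return value;
    }

    public static void main(String[] args) {
        Random random = new Random();
        SideEffectSketch first = new SideEffectSketch();
        double lat = first.sideEffect(Double.class, random::nextDouble);

        SideEffectSketch again = first.replay();
        double replayedLat = again.sideEffect(Double.class, random::nextDouble);

        // Both values come from the same record, so they match.
        System.out.println(lat == replayedLat);
    }
}
```

Without the recording step, the second call would draw a fresh random number and the replayed workflow would end up with different Order and Delivery locations from the original run.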

The code is available in our GitHub repository. In the next blog in the series we’ll explore Cadence scalability and see how many Drones we can get flying at once.

Appendix: Example Trace

If all goes according to plan, a typical (abbreviated) Drone and Order Workflow trace looks like this:

```
Order WF readyForDelivery activity order_0 id 939328c5-eaad-4f52-9aaf-08100fde1e84
waitForOrder got an order! topic = orderjobs2, partition = 0, offset = 17, key = , value = 939328c5-eaad-4f52-9aaf-08100fde1e84
Drone Drone_0 got an order from Kafka + 939328c5-eaad-4f52-9aaf-08100fde1e84
Drone Drone_0 has got order 939328c5-eaad-4f52-9aaf-08100fde1e84
Drone Drone_0: new state = gotOrder, location = base
order order_0 got signal = droneHasOrder
Drone Drone_0 has generated a flight plan based on Order and Delivery locations
Start lat -35.20586, lon 149.09462
Order lat -35.189085007724415, lon 149.09190627324634
Delivery lat -35.1849320141879, lon 149.08653974053584
End lat -35.20586, lon 149.09462
Drone Drone_0 flight plan total distance (km) = 4.9930887486783835
Drone Drone_0 estimated total flight time (h) = 0.24965443743391919
Drone Drone_0 distance to order (km) = 1.881431511294941
Drone Drone_0 estimated time until order pickup (h) = 0.09407157556474705
Drone Drone_0 distance to delivery (km) = 2.5530367293280447
Drone Drone_0 estimated time until delivery (h) = 0.12765183646640224
Drone Drone_0: new state = flightPlanGenerated, location = base
Drone + Drone_0 flying to pickup Order
Drone Drone_0: new state = flyingToOrder, location = betweenBaseAndOrder
order order_0 got signal = droneOnWayForPickup
Drone WF gpsLocation = lat -35.20586, lon 149.09462
start loc = lat -35.20586, lon 149.09462
Drone flew to new location = lat -35.20536523834301, lon 149.09453994515312
Distance to destination = 1.825940473393331 km
Drone Drone_0 gps location update lat -35.20536523834301, lon 149.09453994515312
Drone Drone_0 charge now = 99.44444444444444%, last used = 0.5555555555555556
Drone WF gpsLocation = lat -35.20536523834301, lon 149.09453994515312
start loc = lat -35.20586, lon 149.09462
Drone flew to new location = lat -35.20487047663333, lon 149.09445989128173
Distance to destination = 1.770449431350198 km
Drone Drone_0 gps location update lat -35.20487047663333, lon 149.09445989128173
Drone flew to new location = lat -35.204375714870956, lon 149.0943798383858
Distance to destination = 1.714958384759105 km
Drone Drone_0 charge now = 98.88888888888889%, last used = 0.5555555555555556
...
Drone flew to new location = lat -35.189085007724415, lon 149.09190627324634
Distance to destination = 0.0 km
Drone Drone_0 gps location update lat -35.189085007724415, lon 149.09190627324634
Drone arrived at destination.
Drone Drone_0 charge now = 81.11111111111106%, last used = 0.5555555555555556
Drone Drone_0 picking up Order
Drone Drone_0: new state = pickingUpOrder, location = orderLocation
Drone Drone_0 picking up Order!
Drone Drone_0 picked up Order!
order order_0 got signal = pickedUpByDrone
Drone Drone_0 charge now = 77.77777777777773%, last used = 3.3333333333333335
Drone Drone_0 delivering Order...
Drone Drone_0: new state = startedDelivery, location = betweenOrderAndDelivery
Order new location = orderLocation
order order_0 got signal = outForDelivery
Order new location = onWay
Drone WF gpsLocation = lat -35.189085007724415, lon 149.09190627324634
start loc = lat -35.189085007724415, lon 149.09190627324634
Drone flew to new location = lat -35.188741877698526, lon 149.09146284539662
Distance to destination = 0.6161141689892213 km
Drone Drone_0 gps location update lat -35.188741877698526, lon 149.09146284539662
Drone Drone_0 charge now = 77.22222222222217%, last used = 0.5555555555555556
Drone flew to new location = lat -35.18839874605641, lon 149.09101942129195
Distance to destination = 0.5606231205354245 km
Order GPS Location lat -35.188741877698526, lon 149.09146284539662
...
Drone flew to new location = lat -35.1849320141879, lon 149.08653974053584
Distance to destination = 0.0 km
Drone Drone_0 gps location update lat -35.1849320141879, lon 149.08653974053584
Drone Drone_0 charge now = 70.55555555555549%, last used = 0.5555555555555556
Drone arrived at destination.
Order GPS Location lat -35.1849320141879, lon 149.08653974053584
Drone + Drone_0 dropping Order!
Drone Drone_0: new state = droppingOrder, location = deliveryLocation
Drone + Drone_0 dropped Order!
Order new location = deliveryLocation
Drone Drone_0 charge now = 67.22222222222216%, last used = 3.3333333333333335
Drone Drone_0 returning to Base
Drone Drone_0: new state = returningToBase, location = betweenDeliveryAndBase
order order_0 got signal = delivered
Drone WF gpsLocation = lat -35.1849320141879, lon 149.08653974053584
start loc = lat -35.1849320141879, lon 149.08653974053584
Drone flew to new location = lat -35.1854079590788, lon 149.08672345348626
Distance to destination = 2.384560977495208 km
Drone Drone_0 gps location update lat -35.1854079590788, lon 149.08672345348626
Drone flew to new location = lat -35.18588390369231, lon 149.08690716858857
Distance to destination = 2.3290699362110967 km
Drone Drone_0 charge now = 66.6666666666666%, last used = 0.5555555555555556
...
Drone flew to new location = lat -35.20586, lon 149.09462
Distance to destination = 0.0 km
Drone Drone_0 charge now = 43.3333333333332%, last used = 0.5555555555555556
Drone Drone_0 gps location update lat -35.20586, lon 149.09462
Drone arrived at destination.
Drone Drone_0 charge now = 42.777777777777644%, last used = 0.5555555555555556
Drone + Drone_0 returned to Base!
Drone Drone_0: new state = backAtBase, location = base
Drone Drone_0: new state = checkOrder, location = base
Drone Drone_0: new state = droneDeliveryCompleted, location = base
Drone Drone_0: new state = charging, location = base
Drone Drone_0 charging! charging time = 515s
Drone Drone_0: new state = charged, location = base
order order_0 got signal = orderComplete
Order WF exiting!
```

Follow the series: Spinning Your Drones With Cadence

Part 1: Spinning Your Workflows With Cadence!

Part 2: Spinning Apache Kafka® Microservices With Cadence Workflows

Part 3: Spinning your drones with Cadence

Part 4: Architecture, Order and Delivery Workflows