In this next installment of the Cadence Drone demonstration application blog series, we introduce a “New and Improved” Cadence+Kafka integration pattern and push the boundaries of Cadence scalability to discover how many drones we can fly at once.

1. A New and Improved Drone+Order Matching Service

Catching a cab can be difficult in New York! (Source: Shutterstock)

Whenever I see “New and Improved” product claims, I wonder what was previously wrong with the product (after all, why would it need improving if it wasn’t broken?). Consistent with this theory, it turns out that there was something “broken” in my previous Cadence Drone Delivery demo application code.

In a previous blog (section 4: Drone Gets Next Order for Delivery) we explained one of the Cadence+Kafka integration patterns, which was designed to enable drones to pick up an order that is ready for delivery. We used a simple queue approach, with a single Kafka consumer tightly coupled with each Drone Workflow as follows. The Drone Workflow has a Wait For Order Activity. This wraps a Kafka consumer, which actually runs in the Cadence activity thread, so it is transient, lasting only as long as the activity runs. It polls the Orders Ready topic until a single order is returned, resulting in the activity completing and the drone proceeding with the delivery.

This approach had the virtue of simplicity, but it has a potential problem. Because the Kafka consumer is regularly being created and destroyed, there is constant consumer rebalancing overhead (see this blog for more information about rebalancing storms). This wasn’t a practical problem up to about 100 concurrent drones, but after embarking on some more serious load testing for this blog, I noticed substantial delays in exactly this part of the workflow, with sometimes tens of seconds elapsing between a drone being ready and it acquiring an order. So, it was time for a new and improved “matching service”.

(Source: Shutterstock)

To improve the performance, I introduced a “dispatching service”, similar to what you see at busy airport taxi ranks, where a dispatcher coordinates the passengers and taxis, by directing passengers to specific taxi bays to wait for the next available taxi. I guess ride-share apps achieve a similar result these days.

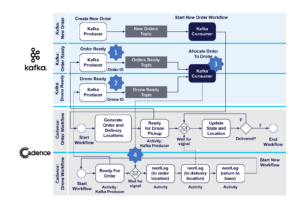

Here’s how the new matching service works. As before, (1) orders that are ready for delivery are put onto the Orders Ready topic by an Order Workflow. We now add a new Kafka topic, the Drone Ready topic. When a drone is ready for an order, (2) it sends a message containing its Drone ID to the Drone Ready topic using a Kafka producer, and then waits for a signal. There’s also (3) a new permanent Kafka consumer (“Allocate Order To Drone”) that reads from both the Orders Ready and the Drone Ready topics. This consumer runs independently and continuously outside of the Cadence workflows, which avoids the previous rebalancing problems. When the consumer has a message from both topics, (4) it sends a signal containing the Order ID to the correct Drone Workflow. The signal tells the drone which order it is now responsible for delivering and kicks off the delivery part of the workflow. The updated diagram shows these four steps.

The Cadence Drone demonstration code has been updated, and there’s a new class for the new Kafka consumer, MatchOrderToDrone.java.
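The real class is in the demo repository. As a rough illustration of the pairing logic only, here is a minimal in-memory sketch that is not the actual MatchOrderToDrone code. It assumes two plain queues standing in for the Orders Ready and Drone Ready topics, and a list of assignments standing in for the signals sent to Drone Workflows. The class name `OrderDroneMatcher`, its methods, and the example IDs are all hypothetical, and no Kafka or Cadence APIs are used.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Hypothetical in-memory stand-in for the "Allocate Order To Drone" consumer. */
class OrderDroneMatcher {

    /** Stands in for the signal (carrying the Order ID) sent to one Drone Workflow. */
    static final class Assignment {
        final String droneId;
        final String orderId;

        Assignment(String droneId, String orderId) {
            this.droneId = droneId;
            this.orderId = orderId;
        }
    }

    // Stand-ins for the two Kafka topics (assumption: simple FIFO matching).
    private final Queue<String> readyOrders = new ArrayDeque<>();
    private final Queue<String> readyDrones = new ArrayDeque<>();

    // Stand-in for signals that would be sent to Drone Workflows.
    private final List<Assignment> signals = new ArrayList<>();

    /** Called when an order ID arrives from the "Orders Ready" side. */
    void onOrderReady(String orderId) {
        readyOrders.add(orderId);
        match();
    }

    /** Called when a drone ID arrives from the "Drone Ready" side. */
    void onDroneReady(String droneId) {
        readyDrones.add(droneId);
        match();
    }

    /** Pair the oldest waiting drone with the oldest waiting order while both exist. */
    private void match() {
        while (!readyOrders.isEmpty() && !readyDrones.isEmpty()) {
            signals.add(new Assignment(readyDrones.poll(), readyOrders.poll()));
        }
    }

    List<Assignment> signals() {
        return signals;
    }

    public static void main(String[] args) {
        OrderDroneMatcher matcher = new OrderDroneMatcher();
        matcher.onOrderReady("order-1"); // no drone yet, so nothing is signalled
        matcher.onDroneReady("drone-A"); // now a pair exists
        for (Assignment a : matcher.signals()) {
            System.out.println(a.droneId + " <- " + a.orderId);
        }
    }
}
```

The point of the sketch is that matching happens in one long-lived place, so drones and orders can arrive in any order and simply wait in their queue until a partner shows up, rather than each drone creating and destroying its own consumer.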

2. Cadence Scalability Experiments

2.1 Cluster Details

Cadence is designed for scalable high throughput workflow execution, so now let’s see how many drones we can actually fly! Of course, scalability depends on the hardware and other infrastructure available, so for these experiments, I provisioned a production-ready Instaclustr managed Cadence service (on AWS) with the following resources:

Cadence cluster: 3 nodes of CAD-PRD-m5ad.large-75 instances (2 VCPUs) = 6 VCPUs

Cassandra cluster: 9 nodes of m5l-250-v2 instances (2 VCPUs) = 18 VCPUs

Total cluster cores = 24

I also spun up an EC2 instance to run the Cadence client and worker code (8 VCPUs). So, 24+8 = 32 VCPUs in total. The ratio of resources across the end-to-end system (Client:Cadence:Cassandra) was therefore 8:6:18 VCPUs, or roughly 1:1:3.

Note that I initially tried 3 and 6 node Cassandra clusters, but they were obviously a bottleneck, so our Cadence Ops gurus suggested increasing the number of nodes to 9 (i.e. 3 x the number of Cadence nodes), which is apparently the recommended 1:3 ratio of Cadence to Cassandra resources.

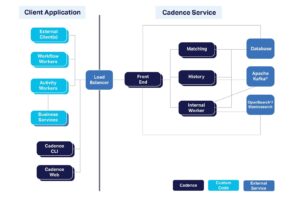

The Cadence client was connected to the managed Cadence cluster via a load balancer to assist with balanced Cadence cluster node utilization (on AWS we automatically provision one of these).

Here’s an overview of the various components making up a complete Cadence system. Apache Kafka and OpenSearch are optional, but provide useful “advanced visibility” into running Cadence workflows:

2.2. Experiment One: Flat Out

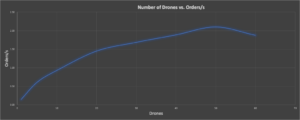

My Cadence Drone Delivery demonstration application wasn’t specifically designed to be a benchmark, so I used a few tricks to get some benchmark results. For my first attempt, I changed the drone flying speed to 100 km/h and ran the drones flat out (i.e. faster than real time, with no wait times between events). This meant that I could run a large number of benchmark tests in a small amount of time, increasing the number of concurrent drone workflows until the maximum cluster capacity was reached. I also added a “benchmarking” mode to the code, which forced the delivery distance to be identical (the maximum allowed) for every delivery; otherwise there was potentially too much variation for repeatable benchmarking. The number of orders was ten times the number of drones.

To measure the drone workflow throughput, I measured the elapsed time of each run (from the logs) and divided total orders by total time to give completed orders per second. The graph of concurrent drones vs. orders/s clearly shows the throughput increasing, reaching a peak at 50 concurrent drone workflows, then dropping off. Cadence cluster CPU utilization was between 53% and 93% for the 50-drone result (i.e. some nodes were more heavily utilized than others).

Concurrent Drones vs. Orders/s, showing a peak of 50 Drones
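The throughput metric used in this experiment is simple enough to sketch. The following is a minimal illustration, assuming ten orders per drone as described above. The class and method names are hypothetical, and the elapsed time used in `main` is a made-up placeholder, not a measured result from these runs.

```java
/** Hypothetical helper illustrating the orders/s calculation used for the benchmark. */
class ThroughputCalc {

    // From the text: the number of orders was ten times the number of drones.
    static final int ORDERS_PER_DRONE = 10;

    /** Completed orders per second = total orders / elapsed run time in seconds. */
    static double ordersPerSecond(int concurrentDrones, double elapsedSeconds) {
        if (elapsedSeconds <= 0) {
            throw new IllegalArgumentException("elapsedSeconds must be positive");
        }
        int totalOrders = concurrentDrones * ORDERS_PER_DRONE;
        return totalOrders / elapsedSeconds;
    }

    public static void main(String[] args) {
        // Placeholder input only: 50 drones and an invented 100 s elapsed time.
        double throughput = ThroughputCalc.ordersPerSecond(50, 100.0);
        System.out.println("orders/s = " + throughput);
    }
}
```

Repeating this calculation for each run, with the elapsed time taken from that run's logs, gives one point per drone count on the graph.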

2.3. Experiment Two: Real Time

For my second experiment I wanted to see how many drones I could fly in “real time”—that is, if they were taking the actual time for flying, a maximum of 30 minutes per delivery (with 10s wait times between each movement, pickups and drop-offs taking 60s, and charging taking time as well). To achieve the benchmarking for this experiment, I used modified code that ramped up the number of drones slowly. Why? Because there’s actually significant overhead in creating Cadence workflows, which meant that I had to leave sufficient time after workflow creation before giving the drones orders to deliver, to ensure that there were sufficient Cadence cluster resources available for normal workflow execution. And the final results were…