Overview

Originally developed and open sourced by Uber, Cadence® is a workflow engine that greatly simplifies microservices orchestration for executing complex business processes at scale.

Instaclustr has offered Cadence on the Instaclustr Managed Platform for more than 12 months now. Having made open source contributions to Cadence to allow it to work with Apache Cassandra® 4, we were keen to test how Cadence performs with the new features of Cassandra 4.1.

Paxos is the consensus protocol used in Cassandra to implement lightweight transactions (LWT) that can handle concurrent operations. However, the initial version of Paxos, Paxos v1, achieves linearizable consistency at the high cost of 4 round-trips for write operations. This cost could affect Cadence performance, because Cadence relies on LWT to execute multi-row, single-shard conditional writes to Cassandra, according to this documentation.

With the release of Cassandra 4.1, Paxos v2 was introduced, promising a 50% improvement in LWT performance, reduced latency, and a reduction in the number of roundtrips needed for consensus, as per the release notes. Consequently, we wanted to test the impact on Cadence’s performance with the introduction of Cassandra Paxos v2.

This blog post focuses on benchmarking the performance impact of Cassandra Paxos v2 on Cadence, with a primary performance metric being the rate of successful workflow executions per second.

Benchmarking Setup

We used the cadence-bench tool to generate bench loads on the Cadence test clusters. To reduce the number of variables in the benchmarking, we only used the basic loads, which don’t require the Advanced Visibility feature.

Cadence bench tests require a Cadence server and bench workers. The following are their setups in this benchmarking:

Cadence Server

At Instaclustr, a managed Cadence cluster depends on a managed Cassandra cluster as the persistence layer. The test Cadence and Cassandra clusters were provisioned in their own VPCs and used VPC Peering for inter-cluster communication. For comparison, we provisioned 2 sets of Cadence and Cassandra clusters—Baseline set and Paxos v2 set.

- Baseline set: A Cadence cluster dependent on a Cassandra cluster with the default Paxos v1

- Paxos v2 set: A Cadence cluster dependent on a Cassandra cluster with Paxos upgraded to v2

We provisioned the test clusters in the following configurations:

| Application | Version | Node Size | Number of Nodes |
| --- | --- | --- | --- |
| Cadence | 1.2.2 | CAD-PRD-m5ad.xlarge-150 (4 CPU cores + 16 GiB RAM) | 3 |
| Cassandra | 4.1.3 | CAS-PRD-r6g.xlarge-400 (4 CPU cores + 32 GiB RAM) | 3 |

Bench Workers

We used AWS EC2 instances as the stressor boxes to run bench workers that generate bench loads on the Cadence clusters. The stressor boxes were provisioned in the VPC of the corresponding Cadence cluster to reduce network latency between the Cadence server and bench workers. On each stressor box, we ran 20 bench worker processes.

These are the configurations of the EC2 instances used in this benchmarking:

| EC2 Instance Size | Number of Instances |
| --- | --- |
| c4.xlarge (4 CPU cores + 7.5 GiB RAM) | 3 |

Upgrade Paxos

For the test Cassandra cluster in the Paxos v2 set, we upgraded Paxos to v2 after the cluster reached the running state.

According to the section Steps for upgrading Paxos in NEWS.txt, upgrading Paxos to v2 on a Cassandra cluster requires setting 2 configuration properties and ensuring that Paxos repairs run regularly. We also planned to change the consistency level used for LWT in Cadence to fully benefit from the Paxos v2 improvements.

We took the following actions to upgrade Paxos on the test Paxos v2 Cassandra cluster:

Added these configuration properties to the Cassandra configuration file cassandra.yaml:

| Configuration Property | Value |
| --- | --- |
| paxos_variant | v2 |
| paxos_state_purging | repaired |
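As a quick sanity check on the two properties above, the following minimal sketch scans the text of a cassandra.yaml file and reports which of the expected Paxos v2 settings are missing or set to a different value. The helper names and the sample file content are hypothetical, and the parser is a deliberately simple line-based reader rather than a full YAML parser.

```python
# Hypothetical helper, not part of Cassandra or Instaclustr tooling.
# Reads only top-level "key: value" lines; nested YAML is ignored.

EXPECTED_PAXOS_V2 = {"paxos_variant": "v2", "paxos_state_purging": "repaired"}


def read_top_level_settings(yaml_text):
    """Return a dict of the unindented "key: value" pairs in yaml_text."""
    settings = {}
    for raw_line in yaml_text.splitlines():
        line = raw_line.split("#", 1)[0].rstrip()  # drop trailing comments
        if not line or line[0].isspace() or ":" not in line:
            continue  # skip blank lines and indented (nested) entries
        key, value = line.split(":", 1)
        settings[key.strip()] = value.strip()
    return settings


def missing_paxos_v2_settings(yaml_text):
    """Return the expected settings that are absent or have another value."""
    actual = read_top_level_settings(yaml_text)
    return {k: v for k, v in EXPECTED_PAXOS_V2.items() if actual.get(k) != v}


# Sample content is an assumption made for illustration only.
sample = """
cluster_name: example   # hypothetical
paxos_variant: v2
paxos_state_purging: repaired
"""
print(missing_paxos_v2_settings(sample))  # empty dict when both are set
```

An empty result means both properties are present with the values from the table; anything else lists what still needs to change.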

For Instaclustr managed Cassandra clusters, we use an automated service on Cassandra nodes to run Cassandra repairs regularly.

Cadence sets LOCAL_SERIAL as the consistency level for conditional writes (i.e., LWT) to Cassandra as specified here. Because it’s hard-coded, we could not change it to ANY or LOCAL_QUORUM as suggested in the Steps for upgrading Paxos.

The Baseline Cassandra cluster used the default Paxos v1, so no configuration changes were required.

Bench Loads

We used the following configurations for the basic bench loads to be generated on both the Baseline and Paxos v2 Cadence clusters:

```
{
  "useBasicVisibilityValidation": true,
  "contextTimeoutInSeconds": 3,
  "failureThreshold": 0.01,
  "totalLaunchCount": 100000,
  "routineCount": 15 or 20 or 25,
  "waitTimeBufferInSeconds": 300,
  "chainSequence": 12,
  "concurrentCount": 1,
  "payloadSizeBytes": 1024,
  "executionStartToCloseTimeoutInSeconds": 300
}
```

All the configuration properties except routineCount were kept constant. routineCount defines the number of parallel launch activities that start the stress workflows. In other words, it controls the rate at which concurrent test workflows are generated, and hence can be used to test Cadence’s capability to handle concurrent workflows.

We ran 3 sets of bench loads with different routineCounts (i.e., 15, 20, and 25) in this benchmarking so we could observe the impacts of routineCount on Cadence performance.
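To make the "one variable at a time" setup concrete, here is a minimal sketch that derives the three load configurations from a single shared base, changing only routineCount. The property values come from the configuration above; the function name and the output file names are hypothetical and were not part of our tooling.

```python
import json

# Properties held constant across all bench loads (values from this post).
BASE_LOAD = {
    "useBasicVisibilityValidation": True,
    "contextTimeoutInSeconds": 3,
    "failureThreshold": 0.01,
    "totalLaunchCount": 100000,
    "waitTimeBufferInSeconds": 300,
    "chainSequence": 12,
    "concurrentCount": 1,
    "payloadSizeBytes": 1024,
    "executionStartToCloseTimeoutInSeconds": 300,
}


def build_load_configs(routine_counts=(15, 20, 25)):
    """Return one config per routineCount, keyed by a hypothetical file name."""
    return {
        f"basic_routine{count}.json": {**BASE_LOAD, "routineCount": count}
        for count in routine_counts
    }


configs = build_load_configs()
for name, config in configs.items():
    # Print each config as the JSON text a bench load would be given.
    print(name)
    print(json.dumps(config, indent=2))
```

Building every variant from the same base dictionary guards against accidental drift in the properties that are meant to stay fixed.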

We used cron jobs on the stressor boxes to automatically trigger bench loads with different routineCount on the following schedules:

- Bench load 1 with routineCount=15: Runs at 0:00, 4:00, 8:00, 12:00, 16:00, 20:00

- Bench load 2 with routineCount=20: Runs at 1:15, 5:15, 9:15, 13:15, 17:15, 21:15

- Bench load 3 with routineCount=25: Runs at 2:30, 6:30, 10:30, 14:30, 18:30, 22:30
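The schedule above follows a simple pattern: each load repeats every 4 hours, and the three loads are staggered 75 minutes apart so that they do not overlap. The sketch below reproduces the listed start times from those two numbers; the function name is hypothetical and the code only illustrates the arithmetic, not the actual crontab entries.

```python
from datetime import timedelta


def daily_start_times(first_start, every_hours=4):
    """Return 'H:MM' start times within one day, repeating every every_hours."""
    times = []
    current = first_start
    while current < timedelta(hours=24):
        total_minutes = int(current.total_seconds()) // 60
        times.append(f"{total_minutes // 60}:{total_minutes % 60:02d}")
        current += timedelta(hours=every_hours)
    return times


# routineCount -> start times, staggered by 75 minutes per load.
schedule = {
    15: daily_start_times(timedelta(hours=0)),
    20: daily_start_times(timedelta(hours=1, minutes=15)),
    25: daily_start_times(timedelta(hours=2, minutes=30)),
}

for routine_count, times in schedule.items():
    print(f"routineCount={routine_count}: {', '.join(times)}")
```

With this layout each routineCount runs 6 times per day, and every run has a 75-minute window before the next load starts.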

Results

At the beginning of the benchmarking, we observed an aging effect on Cadence performance. Specifically, the key metric, Average Workflow Success/Sec, gradually decreased for both the Baseline and Paxos v2 Cadence clusters as more bench loads were run.

Why did this occur? Most likely because, while a Cassandra cluster holds only a small amount of data, most of that data can be served from memory, so read and write latencies are lower. After more rounds of bench loads were executed, Cadence performance became more stable and consistent.

Cadence Workflow Success/Sec

Cadence Average Workflow Success/Sec is the key metric we use to measure Cadence performance.

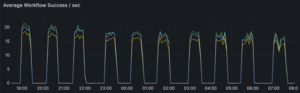

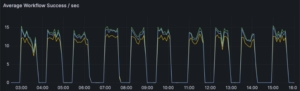

As the graphs show, and contrary to what we expected, the Baseline Cadence cluster achieved around 10% higher Average Workflow Success/Sec than the Paxos v2 Cadence cluster in the sample of 10 rounds of bench loads.

The Baseline Cadence cluster successfully processed 36.2 workflow executions/sec on average.

The Paxos v2 Cadence cluster successfully processed 32.9 workflow executions/sec on average.
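The "around 10%" figure follows directly from the two averages. The short sketch below shows the arithmetic; note that the percentage depends on which cluster is taken as the reference. The function name is hypothetical.

```python
# Average Workflow Success/Sec reported in this post.
baseline = 36.2
paxos_v2 = 32.9


def relative_difference_pct(value, reference):
    """Return how much value differs from reference, as a percentage."""
    return (value - reference) / reference * 100


# Baseline relative to Paxos v2: the "around 10% higher" figure.
print(round(relative_difference_pct(baseline, paxos_v2), 1))  # 10.0

# Paxos v2 relative to Baseline: the same gap seen from the other side.
print(round(relative_difference_pct(paxos_v2, baseline), 1))  # -9.1
```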

Cadence History Latency

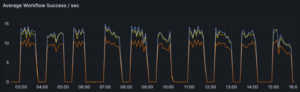

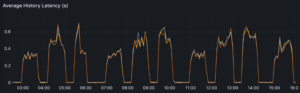

Cadence history service is the internal service that communicates with Cassandra to read and write workflow data in the persistence store. Therefore, Average History Latency should in theory be reduced if Paxos v2 truly benefits Cadence performance.

However, as shown below, Paxos v2 Cadence cluster did not consistently perform better than Baseline Cadence cluster on this metric:

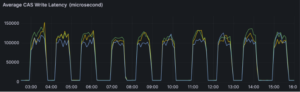

Baseline Cadence cluster

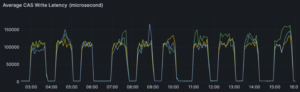

Paxos v2 Cadence cluster

Cassandra CAS Operations Latency

Cadence only uses LWT for conditional writes (LWT is also called Compare and Set [CAS] operation in Cassandra). Measuring the latency of CAS operations reported by Cassandra clusters should reveal if Paxos v2 reduces latency of LWT for Cadence.

As indicated in the graphs below and by the mean value of this metric, we did not observe consistently significant differences in Average CAS Write Latency between Baseline and Paxos v2 Cassandra clusters.

The mean value of Average CAS Write Latency reported by Baseline Cassandra cluster is 105.007 milliseconds.

The mean value of Average CAS Write Latency reported by Paxos v2 Cassandra cluster is 107.851 milliseconds.

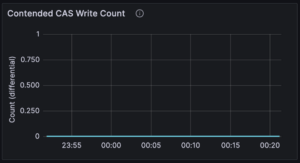

Cassandra Contended CAS Write Count

One of the improvements introduced by Paxos v2 is reduced contention. Therefore, to ensure that Paxos v2 was truly running on Paxos v2 Cassandra cluster, we measured and compared the metric Contended CAS Write Count for Baseline and Paxos v2 Cassandra clusters. As clearly illustrated in the graphs below, Baseline Cassandra cluster experienced contended CAS writes while Paxos v2 Cassandra cluster did not, which means Paxos v2 was in effect on Paxos v2 Cassandra.

Baseline Cassandra cluster

Paxos v2 Cassandra cluster

Conclusion

During our benchmarking test, there was no noticeable improvement in the performance of Cadence attributable to Cassandra Paxos v2.

This may be because we were unable to modify the consistency level used for lightweight transactions (LWT) as required to fully leverage Cassandra Paxos v2, or it may simply be that other factors in a complex, distributed application like Cadence mask any improvement from Paxos v2.

Future Work

Although we did not see promising results in this benchmarking, future investigations could focus on assessing the latency of LWTs in Cassandra clusters with Paxos v2 both enabled and disabled and with a less complex client application. Such exploration would be valuable for directly evaluating the impact of Paxos v2 on Cassandra’s performance.