In two recent blogs, I investigated the new Kafka Tiered Storage architecture, which enables Kafka to stream more (essentially unlimited) data at lower cost (by storing older records on cloud-native storage).

In Part 1 I explored how local vs. remote storage works in theory, and in Part 2 I revealed some performance results and my conclusion that Kafka tiered storage is more like a (pumped hydro) dam (or a write-through cache) than a tiered fountain.

Now we’re taking a broader look at why and when more and older data motivates potential Kafka tiered storage use cases. In Part 3, we explored Kafka time and space, Kafka time travel, Kafka consumer seeking (and how that relates to delivery semantics), Kafka infinite streams, and encountered different time scales.

In this latest part, we’ll explore the impact of various consumer behaviors on the amount and age of data processed. Starting with real-time processing, we then move on to slow consumers, Kafka as a buffer, disconnected consumers, Kafka record replaying and reprocessing use cases, new Kafka sink/downstream systems, and end up on a new colony planet with replicating/migrating Kafka clusters.

Let’s now look at the motivation for some different use cases and consumer behaviors across increasing temporal scales. These also involve some aspect of time travel, or more specifically, time dilation: none (real-time), time slowing down (slow consumers, which process records at a slower rate than they are produced), and potentially time speeding up (for replaying/reprocessing use cases, where consumers can process historic data faster than the original time frame and potentially catch up with the “now” events!).

Real-time processing

In Formula One racing, cars are often separated by the blink of an eye, so every millisecond (or is that decisecond?!) is critical! (Source: Adobe Stock)

Apache Kafka was designed for:

- Speed (low ms, but near or soft real-time with no guarantees vs. hard real-time systems which have guaranteed upper processing times)

- High throughput stream data processing

- In-order processing of multiple “events” with timestamps.

Events are produced by producers, stored in Kafka brokers (multiple brokers for redundancy and scalability), and consumed by consumers (multiple concurrent consumers, potentially in multiple groups that each read the full stream independently).

This is a major use case of Kafka and doesn’t necessarily require long-term storage of records on brokers. Time is essentially always in the present, with no intentional slowing down or speeding up.

However, there are other potential causes (slow consumers) and a Kafka superpower (Kafka as a buffer) that naturally tend to increase the amount of time that records take to be processed and therefore need to be persisted for.

Slow consumers

Slow vehicles that block the road are a problem. (Source: Getty Images)

The default Kafka consumer is single-threaded, so it typically processes one record at a time. You therefore want each record to be processed as fast as possible so you can get on to the next one.

However, slow consumers can take longer to process records—either due to the type or amount of processing required, the type or size of records, latency introduced by downstream systems, etc., or due to intermittent problems (e.g. unavailability or slowing down of downstream systems etc.), including consumer failures and consumer group rebalancing.

Slow consumers can introduce delays of seconds to minutes, requiring records to be stored for at least this long before they are processed. This is the first example of Kafka time dilation—the records are processed slower than real-time, so time slows down.

However, you can have as many concurrent consumers in each consumer group as there are topic partitions, or you can try out the multi-threaded Kafka Parallel Consumer (see Part 1 and Part 2 of my blog series on it) which provides higher concurrency within partitions (ordered by partition, key, or unordered), and may reduce the processing delay.
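As a rough back-of-the-envelope sketch (not a benchmark), the number of consumers needed to keep up can be estimated from the arrival rate and the per-record processing time. The function names and numbers below are hypothetical, and the sketch assumes each consumer processes one record at a time with load spread evenly across partitions.

```python
import math

def consumers_needed(records_per_sec: float, processing_ms: float) -> int:
    """Minimum number of single-threaded consumers needed to match the arrival rate."""
    per_consumer_rate = 1000.0 / processing_ms  # records/s one consumer can handle
    return math.ceil(records_per_sec / per_consumer_rate)

def can_keep_up(records_per_sec: float, processing_ms: float, partitions: int) -> bool:
    """A consumer group cannot have more active consumers than partitions."""
    return consumers_needed(records_per_sec, processing_ms) <= partitions

print(consumers_needed(1000, 20))  # 20
print(can_keep_up(1000, 20, 12))   # False
```

With only 12 partitions in this example, the group cannot keep up, so lag (and the age of the oldest unprocessed record) keeps growing until something changes.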

Kafka as a buffer

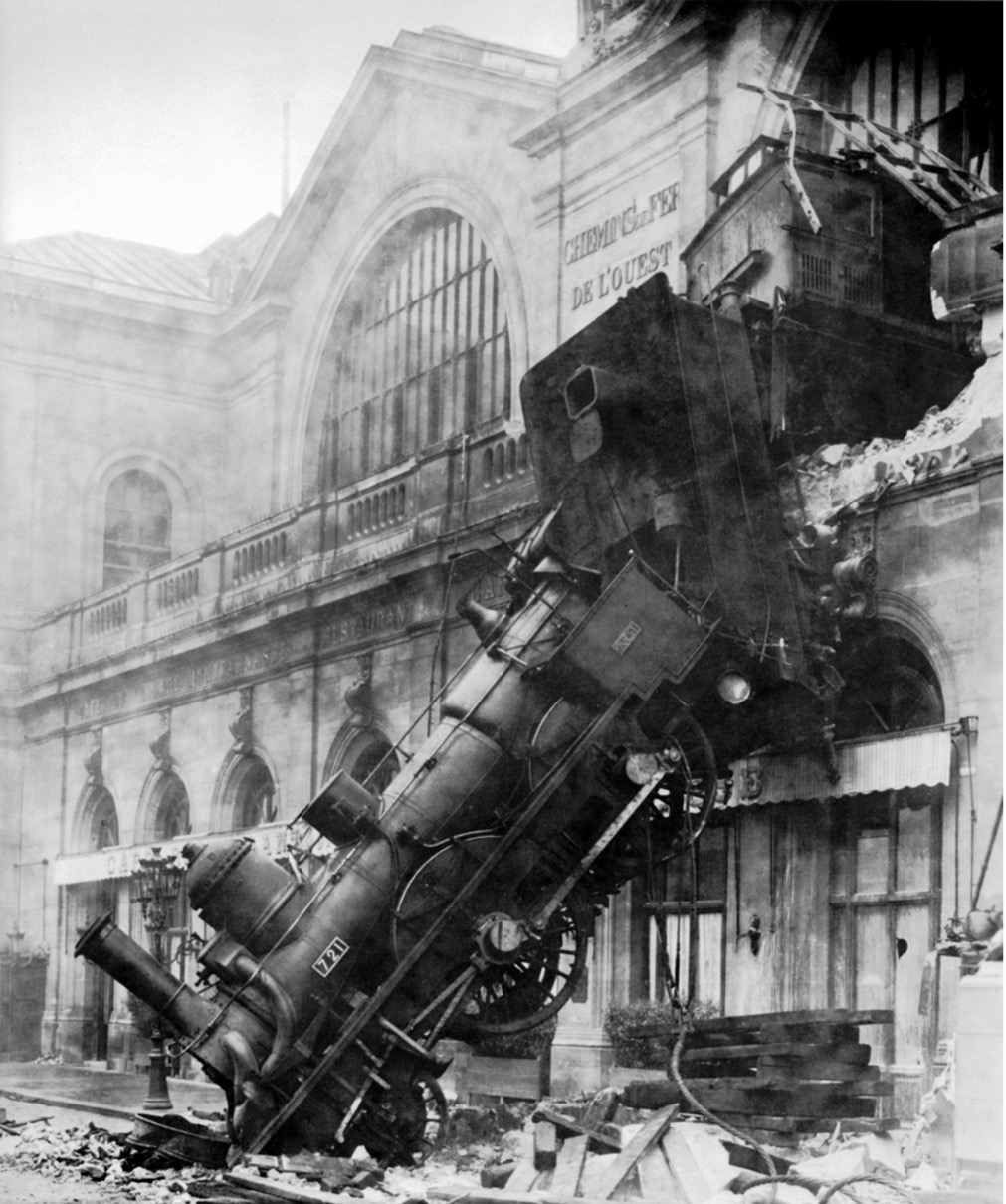

This train needed a bigger buffer! (Source: Wikipedia)

Kafka has a well-known superpower: it can absorb unexpected intermittent write load spikes for short periods of time, ensuring that messages are not lost, and that downstream processing systems are not overloaded. This introduces delays in processing, but no failures. Messages are eventually processed, and consumers can eventually catch up with and process the newest messages.

This is the first use case that requires significantly longer-term message persistence—potentially minutes to hours. However, many traditional pub-sub/message brokers also had this capability (durable messages, although this was mainly designed to cope with broker failure due to having monolithic architectures rather than clustered redundancy).

Depending on the write workload spike throughput and duration, and the consumer read processing rate (which can in theory be increased on demand if there are sufficient topic partitions to increase the number of concurrent consumers on the impacted topics), there may be a need to store messages for hours rather than seconds or minutes in the real-time use case (see example Kafka buffer performance results from my Anomalia Machina experiments).
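To get a feel for the numbers, here is a minimal sketch of the buffering arithmetic. It assumes constant rates, a single spike, no backlog before the spike, and first-in-first-out processing; the function name and the example rates are hypothetical and are not taken from the Anomalia Machina results.

```python
def buffer_estimate(normal_rate, spike_rate, spike_secs, consume_rate):
    """Return (peak_backlog_records, max_delay_secs, drain_secs) for one write spike.

    All rates are in records per second.
    """
    if consume_rate <= normal_rate:
        raise ValueError("consumers would never catch up after the spike")
    # Backlog grows while producers outpace consumers during the spike.
    peak_backlog = max(0, (spike_rate - consume_rate) * spike_secs)
    # The record at the back of the queue at the peak waits the longest.
    max_delay_secs = peak_backlog / consume_rate
    # After the spike, the backlog shrinks at the spare consumer capacity.
    drain_secs = peak_backlog / (consume_rate - normal_rate)
    return peak_backlog, max_delay_secs, drain_secs

backlog, max_delay, drain = buffer_estimate(
    normal_rate=10_000, spike_rate=50_000, spike_secs=600, consume_rate=12_000
)
print(backlog, max_delay, drain)  # 22800000 1900.0 11400.0
```

In this made-up example a 10-minute spike leaves a backlog that takes over three hours to drain, and retention needs to comfortably exceed the maximum delay (about 32 minutes here) so that no record expires before it is read.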

So, here we have a couple of the main use cases that can increase the age of records being processed and therefore their storage duration requirements under more or less normal use. Now let’s look at some more unusual consumer behaviors.

Disconnected consumers

The overland telegraph line ran across outback Australia (and all the way to England under the sea) in the 1870s – there were plenty of disconnections as it relied on a single piece of #8 fencing wire to cross 3,200 km. (Source: Paul Brebner)

With any pub-sub system–Kafka included–there’s no direct communication or even awareness between producers and consumers, so there is no guarantee that there are any consumers ready and waiting to process records for a given topic, even if any records are published to it.

And Kafka doesn’t “push” messages to consumers; whether and when a consumer has subscribed to a topic (and is interested in receiving messages) is entirely up to the consumers. However, Kafka does retain messages so they can still be delivered to eventual consumers, provided they are read within the topic’s retention period (compared to Redis™ Pub/Sub, for example, which does not keep messages for subscribers that are not connected).

There may therefore be a significant delay between records being written to a topic and them being read by a consumer (delayed or lazy consumers). Or even if a consumer is present some of the time, it may be disconnected and then reconnected intermittently (after fixed or varying/random time intervals), for a variety of reasons (including mobile, remote or edge devices, network outages, or intentional disconnection). As a result, there is a need to store records and allow them to be consumed at a later time.

There may even be use cases where consumers delay processing of messages on purpose (e.g. time delay or scheduled topics). This is not a Kafka feature per se, but they can be implemented (e.g. by unsubscribing consumers for the delay time and resubscribing after the period has elapsed).

Note that Kafka consumers can choose if they want to read older messages (which may take more time) or just start from the latest message (skipping older messages). Depending on the use case, older messages may just be irrelevant, or impossible to catch up with or process correctly after some time has already elapsed.
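In the real consumer this choice is controlled by the `auto.offset.reset` setting (`earliest`, `latest`, or `none`), which applies only when the group has no valid committed offset. The toy model below is a sketch of that decision for a single partition, not the client implementation; the function and parameter names are hypothetical.

```python
def starting_offset(log_start, log_end, committed=None, auto_offset_reset="latest"):
    """Return the offset a consumer starts reading from (toy model, one partition).

    log_start: oldest offset still retained; log_end: offset the next record will get.
    """
    # A committed offset that is still within the retained log wins.
    if committed is not None and log_start <= committed <= log_end:
        return committed
    # Otherwise fall back to the reset policy.
    if auto_offset_reset == "earliest":
        return log_start  # read everything still retained (may take a while)
    if auto_offset_reset == "latest":
        return log_end    # skip older records, wait for new ones
    raise ValueError("no valid committed offset and auto.offset.reset=none")

print(starting_offset(100, 500))                                 # 500
print(starting_offset(100, 500, auto_offset_reset="earliest"))   # 100
print(starting_offset(100, 500, committed=250))                  # 250
```

Note the third case matters for disconnected consumers: if a consumer stays away so long that its committed offset has been deleted by retention, it is back to the reset policy.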

Kafka record replaying

Vinyl records can be easily replayed by moving the needle back to the edge of the record. (Source: Adobe Stock)

Kafka records can be replayed too! Maybe it’s your favorite song, or you missed a song the first time around, want to play it to someone else, or want to use it for a different purpose.

There is, however, another Kafka superpower that is a major motivating use case contributing to longer-term storage requirements: Kafka record replaying!

How does Kafka replaying work? Replaying is the main reason behind Kafka’s disk-based log architecture (rather than, say, durability, which is adequately handled by multiple brokers and replication). Messages are not deleted as soon as they are read; they are retained independently of consumer read success, or even the number of reads.

As we saw in previous blogs on Tiered Storage, they are retained based on space/time retention settings. Now with cheaper (and essentially infinite), tiered storage, Kafka records can be retained for a lot longer (years maybe?). This potentially opens up more use cases for record replaying, particularly those that benefit from increased quality of results with increased quantity or selection of records.

There are some related replaying use cases that I can think of including:

- Adding new downstream systems

- Replacing current systems with new systems

- Migration and replication

- Reprocessing, including event-sourcing

- Replaying to correct errors in the original processing, retraining ML models, or checking things (e.g. legal, governance, quality assurance, etc.)

- Generating the same data and load for testing purposes.

Note that when replaying into an existing system you may need to think about how consumer or downstream system idempotency (the ability to replay the same events more than once without getting incorrect results) matters and is handled! In practice the simplest solutions include new consumer groups and new sink topics.
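If you do replay into an existing sink, one common way to get idempotency is to deduplicate on each record's coordinates (topic, partition, offset), which are stable across replays of the same topic. The sketch below is a minimal in-memory illustration; the class, field names, and sample records are hypothetical.

```python
class IdempotentSink:
    """Toy sink that sums amounts per key and ignores records it has already applied."""

    def __init__(self):
        self.totals = {}
        self.seen = set()  # (topic, partition, offset) of applied records

    def apply(self, topic, partition, offset, key, amount):
        record_id = (topic, partition, offset)
        if record_id in self.seen:
            return False  # duplicate from a replay: skip it
        self.seen.add(record_id)
        self.totals[key] = self.totals.get(key, 0) + amount
        return True

records = [
    ("payments", 0, 0, "alice", 10),
    ("payments", 0, 1, "bob", 5),
    ("payments", 0, 2, "alice", 7),
]
sink = IdempotentSink()
for record in records + records:  # the second pass simulates a replay
    sink.apply(*record)
print(sink.totals)  # {'alice': 17, 'bob': 5}
```

A real sink would keep this bookkeeping in durable storage, and tracking only the highest applied offset per partition is a cheaper alternative when records are applied in order.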

Event-sourcing relies on the ability to replay events (from the definitive event log) to rebuild the current state of a system. Kafka tiered storage is ideally suited for this, but you do probably need to think about space and time requirements—if you replay all the events since the beginning (of time?) this will take a long time!

A more practical approach is to take regular snapshots of the state of the universe and only replay events from the last snapshot to rebuild the current state–snapshots could be taken every day, week, month etc. Tiered storage enables potentially longer gaps between snapshots, with the trade-off being that it will take longer to reprocess the events.
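The snapshot idea can be sketched in a few lines. This is a toy, in-memory illustration under the assumption that events are simple (account, delta) pairs and that a snapshot records the state plus the next offset to replay from; all names are hypothetical.

```python
def apply_event(state, event):
    """Apply one (account, delta) event and return the new state."""
    account, delta = event
    new_state = dict(state)
    new_state[account] = new_state.get(account, 0) + delta
    return new_state

def rebuild(events, snapshot=None):
    """Rebuild state from the log, optionally starting from (state, next_offset)."""
    state, start = snapshot if snapshot else ({}, 0)
    replayed = 0
    for event in events[start:]:
        state = apply_event(state, event)
        replayed += 1
    return state, replayed

log = [("a", 5), ("b", 3), ("a", -2), ("b", 1)]
full_state, full_count = rebuild(log)

# Take a snapshot after the first three events, then replay only the rest.
snapshot_state, _ = rebuild(log[:3])
fast_state, fast_count = rebuild(log, snapshot=(snapshot_state, 3))

print(full_state, full_count)  # {'a': 3, 'b': 4} 4
print(fast_state, fast_count)  # {'a': 3, 'b': 4} 1
```

Both paths arrive at the same state, but the snapshot path replays one event instead of four, which is the trade-off described above in miniature.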

Typically, you need a suitable sink system to capture the state in. Here’s an interesting talk on event sourcing with Kafka and TinyBird (built on ClickHouse®—which has potential as an alternative to Kafka Streams for real-time stream analytics).

The last example in the list above (generating the same data and load for testing) is one of my own use cases: I sometimes use Kafka replaying to benchmark sink systems directly from data in Kafka topics via Kafka Connect. This gives you good control over the data and load, and makes the load test easy to repeat.

Here are some of the Kafka replay use cases in more detail. (I’m looking for concrete examples of some of these to test out in more detail next year, so stay tuned!)

New Kafka sink/downstream systems

Huge colony ships could bring settlers to new planets in the future. (Source: Adobe Stock)

Kafka replaying allows for multiple downstream/sink systems to receive the same Kafka events, for different purposes (and potentially in different locations). Using Kafka® Connect, it is common to bring new downstream (sink) systems online and then populate them with the same data that was used to populate the existing sink systems.

Typically, this is done by creating a new Kafka sink connector and replaying all the available historical data from the source Kafka system, so the old and new systems end up having the same state.

The replaying use cases are examples of speeding up time: if you have sufficient consumers and partitions, you can potentially process the historic records faster than “real-time” and eventually catch up to “now”! (Note that increasing the number of partitions doesn’t help for records that have already been written, including those on remote storage: they stay in the partitions they were originally written to.)
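How long does catching up take? A simple estimate, assuming constant rates, is the backlog divided by the difference between the replay rate and the rate at which new records keep arriving. The function name and example numbers are hypothetical.

```python
def catch_up_secs(backlog_records, produce_rate, replay_rate):
    """Seconds until a replaying consumer group reaches "now" (rates in records/s)."""
    if replay_rate <= produce_rate:
        return float("inf")  # new records arrive at least as fast as we replay
    return backlog_records / (replay_rate - produce_rate)

# 30 days of history written at 1,000 records/s, replayed at 10,000 records/s.
backlog = 30 * 86_400 * 1_000
secs = catch_up_secs(backlog, produce_rate=1_000, replay_rate=10_000)
print(secs / 3600)  # 80.0 (hours)
```

So in this made-up case, 30 days of history is replayed in a bit over 3 days, roughly a 9x speed-up of time.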

This is like populating a new planet with a colony spaceship full of settlers (but clones I guess!). But watch out, since it may take some time to reach the same level of civilization (and population) as Earth–and it’s probably going to be a one-way trip!

(Source: Adobe Stock)

Another version of colonization is when something has gone fatally wrong with the original “Earth” system, and you need to abandon the original system eventually. This is more akin to migration!

Replicating or migrating Kafka clusters

The Great Wildebeest Migration is the largest overland migration in the world. (Source: Adobe Stock)

This is a variant of the above use case. Instead of a different downstream/sink technology, you can use Kafka MM2 (MirrorMaker2) to replicate data from topics on a source Kafka cluster to topics on a different destination Kafka cluster, potentially in a different DC/location (e.g. for increased redundancy, reduced latency, or to prevent overloading a primary cluster by moving a subset of the workload to another cluster). Here’s our support page which describes the process to migrate Kafka clusters using MM2 on the Instaclustr Managed Platform.

In theory, this use case can end with the source system being completely abandoned.

An abandoned, inhospitable planet… (Source: Adobe Stock)

What about …

Reprocessing Kafka Streams

I didn’t specifically mention replaying/reprocessing with Kafka Streams. However, some original use cases for Kafka Streams reprocessing from the dark ages of Kafka (2016!) can be found here and are still potentially relevant.

But how can you trick Kafka Streams into reprocessing? By resetting it! There is a Kafka Streams Application Reset Tool that does the trick; in the Apache Kafka distribution the CLI command is $KAFKA/bin/kafka-streams-application-reset.sh (code is here).

Kafka log compaction

Kafka compacted topics are one of the weirder concepts in Kafka (after all, they are more like a simple key-value store than a stream, as they only record the latest value per key). I haven’t made much use of them as a result (but they are used by Kafka internally, and for Kafka Streams state stores). But it’s relevant that one of the few other places that the Apache Kafka documentation explicitly mentions record replaying is in the context of log compaction. Basically, re-playability is key to getting the correct current state (repeatably).
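To make the key-value behavior concrete, here is a toy model of compaction. It is only a sketch: it treats a `None` value as a tombstone and drops deleted keys immediately, whereas real Kafka compacts in the background and keeps tombstones around for a configurable period. The function names are hypothetical.

```python
def compact(log):
    """Keep only the last record per key; drop keys whose last value is a tombstone."""
    last_index = {key: i for i, (key, _value) in enumerate(log)}
    return [
        (key, value)
        for i, (key, value) in enumerate(log)
        if last_index[key] == i and value is not None
    ]

def replay(log):
    """Rebuild a key-value table by replaying records in order."""
    table = {}
    for key, value in log:
        if value is None:
            table.pop(key, None)  # tombstone deletes the key
        else:
            table[key] = value
    return table

log = [("a", 1), ("b", 2), ("a", 3), ("b", None), ("c", 4)]
print(compact(log))                         # [('a', 3), ('c', 4)]
print(replay(log) == replay(compact(log)))  # True
```

Replaying the compacted log gives the same current state as replaying the full log, just with fewer records to read.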

And it looks like we’ve come full circle here with our Kafka tiered storage story. Kafka compacted topics effectively have unlimited retention by default, i.e. they keep the latest value for each key indefinitely.

Here’s the relevant configuration documentation for compacted topics. Surprisingly, it appears that topics can have both delete and compact cleanup policies at once. But note that compacted topics do not have remote storage support in either the early access (3.6.0) or production-ready (3.9.0) release.

Instaclustr for Apache Kafka Tiered Storage is now available as a Public Preview, so find out more here and try it out with some of these types of use cases.